7 Challenges of Industrial IoT Streaming Analytics – Guide to Success

This blog is co-authored by Nacho Lafuente, Founder & CEO, Datumize.

The world of connected devices is growing at such a fast pace that by the end of 2020, there will be more devices than human beings on Earth (Cisco). Many devices have poor-to-limited connectivity, while many others provide closed or proprietary data formats. Integrating data for multiple consumers and uses is a classic data integration problem, and one that is well solved within on-premises data centers. The Internet of Things (IoT), however, is highly distributed and heterogeneous by nature, and this imposes clear limitations on the techniques for collecting data and streaming it into higher layers of processing and analytics. These challenges are amplified in industrial IoT (IIoT) implementations, where data comes from thousands of devices. This article explores seven challenges, and their solutions, for end-to-end distributed data collection and stream processing in support of successful streaming analytics.

Challenge #1: Provisioning data collection to disparate data sources and devices

We all like diversity among humans; it's intrinsic to our nature, and many companies nowadays actively promote diversity in their teams for more imaginative outcomes. The IoT world, however, would be better off with less diversity. There are thousands of different platforms that support IoT devices, such as built-in firmware in simple devices (e.g., lighting), firmware in ultra-expensive devices (e.g., healthcare ventilators), programmable open-source boards (e.g., Arduino), proprietary industrial clouds, or small IoT computers.

Many innovations, such as advanced analytics and artificial intelligence (AI) projects, fail because data capture is neglected. Often, the assumption is that data “will be there” waiting to be picked up. The reality is that you must get your hands dirty to get the data, especially from the field.

There are multiple ways to interact with the zillions of different devices generating data out there. Most of the time, however, due to security restrictions, design decisions, or vendor lock-in, you must be close to the device to capture useful data. Close means living on the same local network, or being physically wired (do you remember serial ports?). This challenge is about being able to provision your data capture agents in the right location without much hassle.

A piece of software provisioned in a remote location must comply with security requirements, not only at initial installation but also during periodic maintenance. This process must be automated to cope with environments that may span hundreds or thousands of devices.

Challenge #2: Data drift

Data drift refers to ongoing changes in data format and semantics over time. Changes may be introduced without notice, which is probably the worst scenario. They may also be introduced with partial notice: for example, the format changes are documented, but significant changes in the semantics of missing and default fields are left out.

In any case, data collection must somehow be aware of this drift to avoid streaming out garbage. There are several strategies for dealing with data drift:

- Ignore the problem, either because you can’t solve it or you’re completely unaware. The data being streamed will be of low quality. This strategy is not recommended!

- Detect that the data format or semantics have been altered, stop data ingestion, and report the issue. This is playing it safe: you don't stream poor-quality data, but only because you don't stream anything at all.

- Best effort is a pragmatic approach that recognizes the complexity of the challenge and tries to stream as much quality data as possible without breaking the stream. Usually, you would expect missing fields to be ignored (and reported), while fields with an unexpected format are passed through as-is (and reported).

- Automatic learning is far more sophisticated and consists of learning how to fix issues when data drift occurs. Imagine an amount that used to be expressed in a certain currency (the default assumption), and suddenly the same field reports both a number and a currency name. Automatic learning applied to data drift will detect this, report it, and adapt the parsing so that it won't fail again.
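To make the best-effort strategy more concrete, here is a minimal Python sketch, assuming records arrive as dictionaries and the expected schema is known up front. `EXPECTED_FIELDS`, `ParseResult`, and `parse_best_effort` are hypothetical names for illustration only; they are not part of any Datumize or Informatica API. The sketch does not attempt automatic learning; it only shows the "ignore and report" and "pass through as-is and report" behaviors.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical expected schema (assumption): field name -> expected type.
EXPECTED_FIELDS = {"device_id": str, "amount": float}


@dataclass
class ParseResult:
    record: dict
    issues: list = field(default_factory=list)


def parse_best_effort(raw: dict) -> ParseResult:
    """Best-effort parsing: keep the stream flowing, but report every drift issue."""
    record: dict = {}
    issues: list = []
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in raw:
            issues.append(f"missing field: {name}")  # ignored, but reported
            continue
        value: Any = raw[name]
        try:
            record[name] = expected_type(value)
        except (TypeError, ValueError):
            record[name] = value  # unexpected format: passed through as-is
            issues.append(f"unexpected format in {name}: {value!r}")
    # Fields the schema does not know about are also a drift signal.
    for name in sorted(raw.keys() - EXPECTED_FIELDS.keys()):
        issues.append(f"unknown field: {name}")
    return ParseResult(record, issues)


if __name__ == "__main__":
    # The currency example: the amount field now carries a currency name too.
    result = parse_best_effort({"device_id": "pump-7", "amount": "12.50 EUR"})
    print(result.record)  # {'device_id': 'pump-7', 'amount': '12.50 EUR'}
    print(result.issues)  # ["unexpected format in amount: '12.50 EUR'"]
```

The issues list is where a "detect and stop" policy would instead halt ingestion, and where an automatic-learning component would look for recurring patterns to fix.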

Data drift is one of the main causes of poor data quality and is especially relevant because it happens at the source. Unless strict auditing capabilities are in place, it's very hard to tell whether data is correct. The direct implication is that the streaming process may eventually be interrupted or, even worse, be fed incorrect data.

Challenge #3: The network is not always available

We live in a world that mostly assumes “always-on” Internet connectivity. But many of us were born in a world without the Internet, a world of phone landlines, so we are well placed to understand that many scenarios can't rely on uninterrupted Internet connectivity.

Maritime operations are an excellent example. Ships, submarines, oil and gas rigs, and offshore wind turbines are assets that navigate or remain isolated for long periods, with limited and expensive connectivity, usually via satellite. Onboard operations can't depend on connectivity to an inland data center. The usual monitoring approaches, such as capturing massive amounts of metrics and triggering alerts on the server, no longer work. Yet you don't want to throw away such valuable data.

The tradeoff here is to accept that disconnections will occur, so your data collection and streaming must be prepared to “store and forward.” While collecting data, some metrics will be consumed and used locally, while others will be buffered temporarily until the ship docks in a harbor and connects to a high-speed network, or until your offshore installation gets its slot to use the satellite wisely.

Store and forward strategies are often related to queuing theory and raise a number of interesting questions for data streaming. Suppose connectivity is intermittent, you have buffered the last 10 minutes of data, and the link comes back for only 30 seconds. Should you prioritize streaming fresh data over older buffered data? Or should you interleave fresh and buffered data?
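One possible answer to the interleaving question can be sketched in a few lines of Python. This is an illustrative assumption, not how any particular product behaves: the link budget is modeled as a maximum number of records per connection window, the backlog is bounded (dropping the oldest records on overflow), and fresh and buffered records are interleaved at a fixed, configurable ratio. `StoreAndForward`, `fresh_per_buffered`, and `budget` are hypothetical names.

```python
from collections import deque
from typing import Any


class StoreAndForward:
    """Hypothetical store-and-forward buffer (illustrative, not a product API).

    Records produced while the link is down go to a bounded backlog; records
    produced while it is up are "fresh". On reconnect, `forward` drains both,
    interleaving them so old data is not starved by new data.
    """

    def __init__(self, max_buffered: int, fresh_per_buffered: int = 1) -> None:
        if fresh_per_buffered < 1:
            raise ValueError("fresh_per_buffered must be at least 1")
        # deque(maxlen=...) silently drops the OLDEST record once full.
        self.backlog: deque = deque(maxlen=max_buffered)
        self.fresh: deque = deque()
        self.fresh_per_buffered = fresh_per_buffered

    def store(self, record: Any, online: bool) -> None:
        """Queue a record as fresh (link up) or buffered (link down)."""
        (self.fresh if online else self.backlog).append(record)

    def forward(self, budget: int) -> list:
        """Return up to `budget` records for the current connection window.

        Sends `fresh_per_buffered` fresh records, then one buffered record,
        and repeats until the budget or both queues are exhausted.
        """
        sent: list = []
        while len(sent) < budget and (self.fresh or self.backlog):
            for _ in range(self.fresh_per_buffered):
                if self.fresh and len(sent) < budget:
                    sent.append(self.fresh.popleft())
            if self.backlog and len(sent) < budget:
                sent.append(self.backlog.popleft())
        return sent


if __name__ == "__main__":
    link = StoreAndForward(max_buffered=3)
    for reading in range(1, 6):            # five readings while offline
        link.store(reading, online=False)  # backlog keeps only 3, 4, 5
    link.store("a", online=True)
    link.store("b", online=True)
    print(link.forward(budget=4))   # ['a', 3, 'b', 4]
    print(link.forward(budget=10))  # [5]
```

Raising `fresh_per_buffered` favors freshness over catching up on the backlog; a priority queue keyed on record importance would be another reasonable policy.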

Challenge #4: You don’t have unlimited network bandwidth

One of the key assumptions of big data is that you can cope with extreme data volumes thanks to inexpensive storage and technology that scales well. This is partially true within on-premises data centers. Anyone who has undertaken a serious big data project will probably appreciate the importance of treating network bandwidth as a constraint. It's not hard to saturate internal routers while transferring bytes into your data lake, and it's even easier to saturate a company's Internet bandwidth when moving bytes back and forth to your private cloud.

Things get even worse out in the field. Satellite links are still in use. Many shops and branches still run on ADSL connections. And battery-powered devices rely on low-power protocols such as Zigbee. Clearly, you must separate the key data from the rest, and this needs to be done in situ: at the edge. Only a smart, distributed agent at the edge can capture the data next to the source, do some local processing for filtering, enrichment, and transformation, and finally stream the resulting valuable pieces into your data center platform.

Techniques such as data differencing (transmitting only the changes) and data compression are commonly used to save bandwidth.
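Both techniques can be combined in a few lines. The following Python sketch assumes each reading is a flat dictionary and that the receiver keeps the last known state per device; `diff`, `encode`, and `decode_and_apply` are hypothetical helpers, and JSON plus `zlib` are illustrative choices rather than any product's wire format.

```python
import json
import zlib


def diff(previous: dict, current: dict) -> dict:
    """Data differencing: keep only fields that are new or whose value changed.

    Note: this sketch does not encode deleted fields.
    """
    return {k: v for k, v in current.items() if k not in previous or previous[k] != v}


def encode(delta: dict) -> bytes:
    """Serialize the delta compactly, then compress it with zlib for transmission."""
    return zlib.compress(json.dumps(delta, separators=(",", ":")).encode("utf-8"))


def decode_and_apply(previous: dict, payload: bytes) -> dict:
    """Receiver side: decompress the delta and merge it into the last known state."""
    delta = json.loads(zlib.decompress(payload).decode("utf-8"))
    return {**previous, **delta}


if __name__ == "__main__":
    last_sent = {"device_id": "pump-7", "temp_c": 21.5, "rpm": 1200, "status": "ok"}
    reading = {"device_id": "pump-7", "temp_c": 21.7, "rpm": 1200, "status": "ok"}
    delta = diff(last_sent, reading)
    print(delta)  # {'temp_c': 21.7}
    print(decode_and_apply(last_sent, encode(delta)) == reading)  # True
```

For very small payloads, compression overhead can exceed the savings, so in practice deltas would typically be batched before compressing.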

Challenge #5: Scalable processing of streaming data in the data center

After a series of vicissitudes, the streamed data arrives at the data center. Scalability usually comes up in discussions of huge workloads: your technology must be designed to support varying volumes and ingestion rates. However, the current COVID-19 situation reminds us how important it is to be able to scale your system down to reduce costs in line with business demand.

Three aspects of scalability need to be considered for streaming data:

- First is the ability to scale your compute up and down so that you consume only what you need. Open-source technologies such as Apache Spark scale horizontally, so customers can size their processing to the needs of their use cases. Cloud vendors also let you scale compute clusters up and down based on demand.

- Second is the ability to connect to a variety of streaming sources and targets using versatile, out-of-the-box connectivity.

- Third is the ability to parse, enrich, and apply complex transformations to the streaming data as it moves through the pipeline. This is very important, since streaming data in its raw form is not very useful for most analytics. Customers usually want to apply complex transformations such as windowing, merging and aggregating streams, and lookups to enrich the data, as well as data quality techniques.
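As a rough illustration of two of these transformations, windowing with aggregation and lookup enrichment, here is a plain-Python sketch of a tumbling-window average per device. It is a simplification under stated assumptions: all readings are available up front, and `Reading`, `DEVICE_SITE`, and `tumbling_window_avg` are hypothetical names. A streaming engine such as Spark would instead emit windows incrementally and handle late-arriving data.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Reading(NamedTuple):
    device_id: str
    timestamp: float  # seconds since epoch
    value: float


# Hypothetical reference data for lookup enrichment (device -> site).
DEVICE_SITE = {"pump-1": "plant-A", "pump-2": "plant-B"}


def tumbling_window_avg(readings: Iterable[Reading], window_s: float) -> list:
    """Average readings per device over fixed, non-overlapping (tumbling) windows."""
    acc = defaultdict(lambda: [0.0, 0])  # (device, window index) -> [sum, count]
    for r in readings:
        window = int(r.timestamp // window_s)  # which window this reading falls into
        acc[(r.device_id, window)][0] += r.value
        acc[(r.device_id, window)][1] += 1
    results = []
    for (device_id, window), (total, count) in sorted(acc.items()):
        results.append({
            "device_id": device_id,
            "site": DEVICE_SITE.get(device_id, "unknown"),  # lookup enrichment
            "window_start": window * window_s,
            "avg": total / count,
        })
    return results


if __name__ == "__main__":
    stream = [Reading("pump-1", 0, 1.0), Reading("pump-1", 30, 3.0),
              Reading("pump-1", 65, 5.0), Reading("pump-2", 10, 2.0)]
    for row in tumbling_window_avg(stream, window_s=60):
        print(row)
```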

These aspects need to be addressed for two reasons:

- Without out-of-the-box capabilities for connectivity and complex transformations, you will need to write and maintain expensive hand-coded logic for these operations, which leads to significant cost overruns in the long run.

- Big data and cloud technologies are changing rapidly, and today's technology choice may not be relevant tomorrow. You need a future-proof approach to stream processing.

Challenge #6: Timely reaction upon operational events

One of the main business reasons customers implement streaming technology is to be proactive, rather than reactive, with respect to customer experience, operational efficiency, and business process automation. This is where the real value of streaming lies, and it can have a positive effect on both the top line and the bottom line.

Some of the most common use cases for operationalizing actions on streaming data are:

- Triggering targeted real-time campaigns based on customer behavior and purchase patterns

- Providing next-best offers to customers based on purchases they are making right now

- Acting on stress signals from IoT devices and sensors before it is too late, by triggering disaster management processes

- Improving customer experience by detecting fraudulent transactions or anomalous behavior and informing customers in real time by operationalizing AI and machine learning (ML) algorithms on transactional data
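To illustrate the "stress signals" use case, here is a minimal Python sketch of one simple detection technique: a rolling z-score over a sliding window of recent readings. The window size, threshold, and `on_alert` callback are illustrative assumptions; the callback merely stands in for whatever disaster management or alerting process would actually be triggered, and `StressDetector` is a hypothetical name.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Callable


class StressDetector:
    """Hypothetical rolling z-score detector for a single sensor signal."""

    def __init__(self, window: int = 20, threshold: float = 3.0,
                 on_alert: Callable[[str], None] = print, min_std: float = 1e-6) -> None:
        self.history: deque = deque(maxlen=window)  # recent readings only
        self.threshold = threshold
        self.on_alert = on_alert  # stand-in for a real alerting/disaster-management hook
        self.min_std = min_std    # floor so a perfectly flat history can't divide by zero

    def observe(self, value: float) -> bool:
        """Check a new reading against recent history; return True if it alerts."""
        alert = False
        if len(self.history) >= 2:  # need some history before judging
            mu = mean(self.history)
            sigma = max(pstdev(self.history), self.min_std)
            z = abs(value - mu) / sigma
            if z > self.threshold:
                alert = True
                self.on_alert(f"stress signal: value={value}, z={z:.1f}")
        self.history.append(value)
        return alert


if __name__ == "__main__":
    detector = StressDetector(window=5, threshold=3.0)
    for v in [10.0, 10.4, 9.8, 10.1, 10.0, 50.0]:
        detector.observe(v)  # only the final reading triggers an alert
```

A z-score is only one option; the machine learning models mentioned above would replace the fixed threshold with a learned notion of "normal".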

It is important to operationalize actions on streaming data so that you can take advantage of your investments in streaming technologies.

Challenge #7: End-to-end data governance on streaming data

Many organizations want to understand how data travels through their systems from source to target and how it gets transformed before it is used. This is required for auditing purposes and also to ensure that your reports and dashboards use trusted data. Hence, it is essential to have data governance solutions in place that address the lineage and governance of streaming data from end to end.

However, streaming data puts extra stress on the governance process because of the real-time and heterogeneous nature of streaming itself. All new data sources must comply with corporate data governance to avoid the usual pitfalls. You should not bypass corporate policies just because something (e.g., your valuable streaming data source) is new to the company.
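The core idea of lineage, recording where data came from and which transformations touched it, can be sketched at the record level in Python. This is an illustrative assumption only: governance tools such as data catalogs typically derive lineage from pipeline metadata rather than by tagging each record, and `TracedRecord` and `apply_step` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class TracedRecord:
    """A payload plus the ordered steps that produced it (hypothetical)."""
    payload: dict
    lineage: tuple = ()


def apply_step(record: TracedRecord, step: str, fn: Callable[[dict], dict]) -> TracedRecord:
    """Run one transformation and append a lineage entry; the input is left untouched."""
    entry = {"step": step, "at": datetime.now(timezone.utc).isoformat()}
    return TracedRecord(fn(record.payload), record.lineage + (entry,))


if __name__ == "__main__":
    raw = TracedRecord({"device_id": "boiler-3", "temp_f": 212.0})
    celsius = apply_step(raw, "convert_f_to_c",
                         lambda p: {"device_id": p["device_id"], "temp_c": (p["temp_f"] - 32) * 5 / 9})
    enriched = apply_step(celsius, "lookup_site", lambda p: {**p, "site": "plant-A"})
    for hop in enriched.lineage:
        print(hop["step"], hop["at"])
```

Because each step returns a new record, an auditor can replay the `lineage` tuple to see exactly which transformations produced a value on a dashboard.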

The Informatica and Datumize Solution

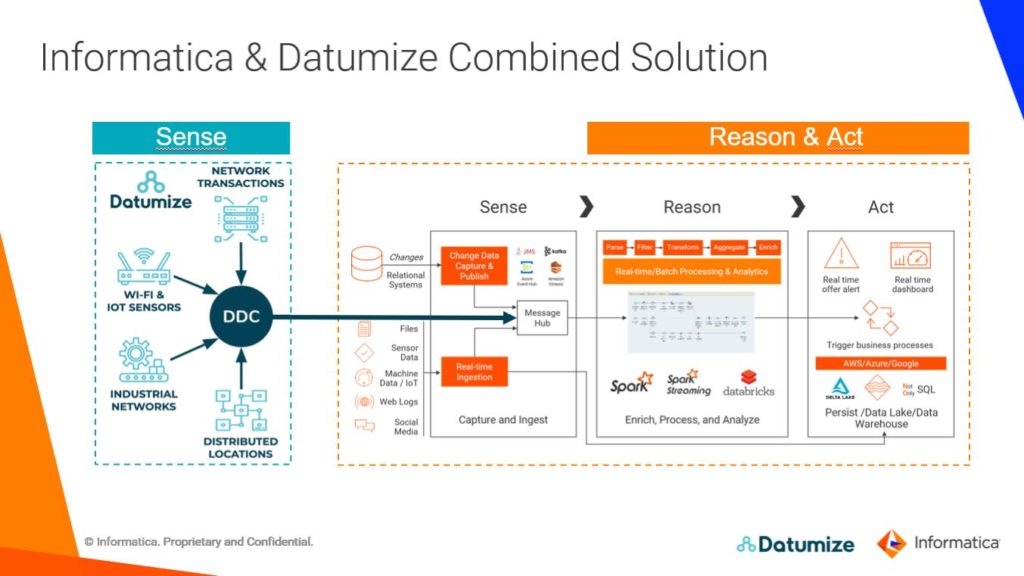

Informatica Data Engineering Streaming uses the Sense-Reason-Act framework to help data engineers ingest, analyze, and act on the broadest range of real-time streaming data and make decisions while events are moving through the pipe. The framework helps customers extract value from fast-moving, continuous data streams.

For Datumize, Informatica Data Engineering Streaming (DES) represents a powerful, sophisticated, and enterprise-ready solution to unleash the value of data captured with Datumize Data Collector to sense, reason, and act on trusted data, all within the context of dynamically changing information, business rules, and analytic models.

For Informatica, Datumize acts as a powerful data ingestion engine that can capture data from sophisticated dark data sources, process it at the edge, and ingest it in real time into Informatica Data Engineering Streaming.

The Datumize and Informatica solution, as illustrated in the image above, is a proven solution in which Datumize specializes in capturing dark data (Sense) and streams this data into Informatica Data Engineering Streaming (Reason & Act) for downstream real-time processing, integration, and streaming analytics.

The combined Informatica and Datumize solution brings specific advantages for end-to-end IoT streaming data management:

- Datumize collects and leverages dark data, especially from IoT, industrial IoT, and streaming sources such as sensors, Wi-Fi, industrial equipment, control and automation systems, on-the-edge devices, affiliated/distributed locations, and network transactions.

- Datumize Data Collector (DDC) is specifically designed to be provisioned in remote, heterogeneous platforms and operating systems, and collect data from disparate data sources and devices.

- The solution offers a scalable way to handle large volumes of varied data, both on-premises and in the cloud. Support for data drift is available both at the edge, in Datumize Data Collector, and in Informatica Data Engineering Streaming, so the streamed data is not disrupted.

- The real world requires advanced, network-aware data collection functionality, such as store and forward, streaming compression, or obfuscation. Datumize Data Collector can provide such functionality when ingesting data into Informatica Data Engineering Streaming.

- Scalable processing of streaming data is an essential aspect of end-to-end streaming data management. Informatica Data Engineering Streaming provides out-of-the-box connectivity to a variety of streaming sources and targets along with a rich set of out-of-the-box transformations for parsing and enriching the data, including merge, aggregate, lookup, and data quality transformations. Also, it pushes down all the complex transformations on streaming data to Apache Spark for massive horizontal scaling.

- Operationalizing actions on the streaming data helps customers take advantage of their investment in streaming technologies to improve their revenues or optimize costs. Data Engineering Streaming helps customers operationalize business rules, machine learning models, and also trigger business processes on the streaming data as the data is going through the pipe.

- End-to-end lineage of streaming data flows, for governance and auditing, is a crucial customer requirement. Informatica Enterprise Data Catalog provides complete lineage for streaming data flows, which helps customers manage their streaming pipelines in line with the established governance principles within the organization.

Next Steps

- Visit the Datumize Data Collector webpage

- Read the solution brief: Real-Time Streaming Analytics for Dark Data Sources

- Watch the on-demand webinar: Next-Gen Customer Experience With Real-Time Streaming Analytics and IoT