A(I) Magical Leap to Trust

No two days are ever the same for our UX team, but not a single day passes without at least one discussion about AI-enabled experiences, whether we’re comparing the advantages of using a chatbot as a personal assistant for our customers, prototyping what happens when we add CLAIRE-driven recommendations to enhance users’ daily tasks, or even applying Natural Language Processing (NLP) technology to make creating business rules simple and easy. AI, NLP, chatbots, and the like are now part of my daily lingo. But this was not always the case.

My first week as a member of the product development team at Informatica was a blur of information, applications, and acronyms to learn and memorize, but it also marked a pivotal point in my career as a UX designer. One morning, my colleague Marisa Minuchin, who heads one of our development groups, approached me and said, “I want to show you something we are working on: it’s an algorithm that knows how to take unstructured data and create a meaningful structure from it.” Intrigued, I followed her to her office.

Marisa opened a log file that looked like gibberish to me. Nothing made sense until she ran the algorithm. The black screen filled with lines that kept changing in front of me (‘It’s thinking,’ I thought to myself). After a few seconds, a structure appeared: a tree. She walked me through the results: “You see, this is a first name, that’s a second name, an IP address, and some sort of ID.”

Marisa continued her demonstration, this time pulling up an even bigger file that appeared to be a collection of unrelated data. Again, the algorithm worked its magic and created structure out of the unknown. “Look,” she exclaimed, “it can learn,” pointing out how the algorithm could guess that a string was actually a first name, based on content it had processed earlier. It was incredible! I remember feeling as though I had just witnessed a miracle.

Next, she turned to me and asked, “How would you like to create a UI for this algorithm?” The smile on my face said it all.

Looking back on my initial UI designs, it’s clear I had fun creating the concepts. Since we wanted our users to have a captivating experience, the team and I tried to infuse the UX with gamification elements. This was an amazing invention, and we wanted to share it with the world.

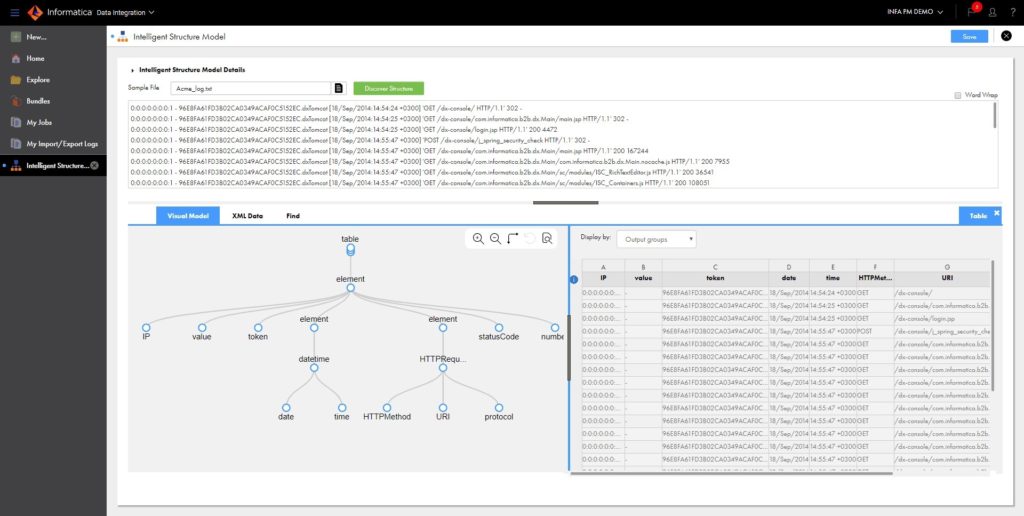

Six years later, this tool has evolved and matured into an integral part of Informatica’s cloud offering, known today as Intelligent Structure Discovery (ISD). Our technology has gone from something magical to a tool that shapes users’ interactions and expectations. Users now want to understand how and why the algorithm chooses a certain path, and they want to influence the outcome: select a different path, and define not only which data the algorithm uses but also how much of it, and more.

But greater capabilities and wider acceptance of this technology also come with higher expectations. For one thing, black boxes and magic are no longer enough: our users now require algorithmic transparency. To meet these changing standards and address our users’ needs, we must consider the principle of Trust when designing AI-based systems.

UX for AI design principle: Trust

Humans are more likely to forgive each other than to forgive machines, and experience shows that AI systems can upset people, cause mistrust, and, as a result, go unused. If we want users to adopt an AI-enabled system, our design should enable them to trust it.

Following are a few examples of how we achieve this with CLAIRE:

- Research shows that users tend to trust other users’ experience, so whenever possible we design the system to display usage information.

- Research shows that users trust their own experience. For example, if a user has used a certain capability in the past, the UI should make that capability prominent for her.

- Adapt system flows according to users’ actions. By showing clear cause-and-effect relationships between user actions and system outputs, you can help users develop the right level of trust over time.

- Clearly frame the sources of the data. Sharing which data is used in the AI prediction not only reduces the potential for pitfalls and for mistrust about context and privacy, but also gives users greater insight into when and where to apply their own judgment.

- Show the certainty with which a recommendation is made. Displaying a recommendation together with its confidence level lets users decide whether they want to use it.

- Trust is volatile and can change during a workflow. It depends on many factors, such as the persona, the user’s goal, or the type of action. Because trust is not a single occurrence, it must be managed over time, and so must mistrust.
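To make the usage, data-source, and confidence principles a little more concrete, here is a minimal TypeScript sketch of how a UI might phrase a single recommendation. It is not CLAIRE’s implementation: the `Recommendation` shape, the `confidenceBand`, `describeRecommendation`, and `shouldAutoApply` functions, and the 0.85 and 0.6 thresholds are all hypothetical, chosen only for illustration.

```typescript
// Hypothetical shape of an AI recommendation as a UI might receive it.
// None of these field names come from CLAIRE; they are illustrative assumptions.
interface Recommendation {
  label: string;      // the suggestion, e.g. a field type such as "first name"
  confidence: number; // model confidence in [0, 1]
  sources: string[];  // the data the prediction was based on
  priorUses: number;  // how many users accepted this suggestion before
}

type ConfidenceBand = "high" | "medium" | "low";

// Illustrative thresholds (assumptions, not product values).
const HIGH_THRESHOLD = 0.85;
const MEDIUM_THRESHOLD = 0.6;

// Maps a raw confidence score to a coarse band that is easier to read than a number alone.
function confidenceBand(confidence: number): ConfidenceBand {
  if (Number.isNaN(confidence) || confidence < 0 || confidence > 1) {
    throw new RangeError(`confidence must be in [0, 1], got ${confidence}`);
  }
  if (confidence >= HIGH_THRESHOLD) return "high";
  if (confidence >= MEDIUM_THRESHOLD) return "medium";
  return "low";
}

// Builds the text shown next to a suggestion: what is suggested, how certain the
// system is, which data it used, and how other users have responded to it.
function describeRecommendation(rec: Recommendation): string {
  const percent = Math.round(rec.confidence * 100);
  const band = confidenceBand(rec.confidence);
  const sources = rec.sources.length > 0 ? rec.sources.join(", ") : "no sources recorded";
  const usage = rec.priorUses === 1 ? "1 user" : `${rec.priorUses} users`;
  return (
    `Suggested: ${rec.label} (${band} confidence, ${percent}%). ` +
    `Based on: ${sources}. Accepted by ${usage}.`
  );
}

// Only high-confidence suggestions are applied automatically; otherwise the user decides.
function shouldAutoApply(rec: Recommendation): boolean {
  return confidenceBand(rec.confidence) === "high";
}
```

For example, a recommendation labeled "first name" with a confidence of 0.92, one source named `sample.log`, and 12 prior uses would read: `Suggested: first name (high confidence, 92%). Based on: sample.log. Accepted by 12 users.` Limiting automatic application to high-confidence suggestions leaves the decision with the user whenever the system is less sure, which is the reason for showing confidence in the first place.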

In upcoming blog posts, we will explore additional principles to take into account when designing AI-enabled products. Principles such as Clarify, Simplify, Control, and Humanize provide guidance against which we can measure our design choices.

Want to know more?

If you’re wondering how the machine-learning-based innovations in CLAIRE are driving a big leap in data productivity, you can read this white paper. You can also learn more about Intelligent Structure Discovery here.