Why Data Quality is Critical for Your Data Management Initiatives

Hot on the heels of the Gartner® Magic Quadrant™ for Data Quality Solutions, Gartner has just released the 2021 Critical Capabilities for Data Quality Solutions.¹

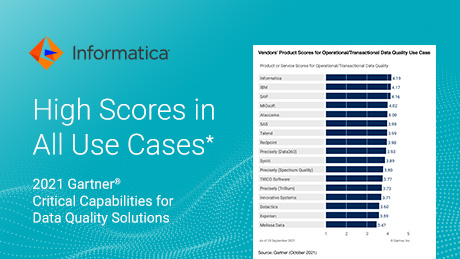

We are proud to be recognized with the highest scores for the following use cases as of September 20th:

- Data Integration (4.20 out of 5)

- Data Migration (4.22 out of 5)

- Operational/Transactional Data Quality (4.19 out of 5)

- AI and Machine Learning (4.23 out of 5 – tied)

In addition, for all other Use Cases we scored 4.0 or higher out of 5: Analytics and Data Science (4.17 out of 5); D&A Governance Initiatives (4.06 out of 5); Master Data Management (4.12 out of 5). We believe this is a testament to Informatica’s continued innovation in our Intelligent Data Management Cloud (IDMC) platform, enabling us to achieve autonomous data management for our customers.

Let’s look at the role data quality plays in the following use cases.

Data Integration and Operational/Transactional Data Quality: As organizations continue their digital transformation journeys, many are not achieving the benefits expected. Increased reliance on automated business processes, tighter regulation, and ongoing competitive pressure are forcing organizations to focus on the data underlying these processes.

So, what does this mean? To support digital transformation goals, all corporate data needs to be universally accessible, certifiably accurate, and reusable. Organizations need to know more about what is in their source systems, and they need to be able to integrate data from multiple systems into new, more productive, data-intensive applications. They need to be able to cleanse and enhance data, as well as monitor and manage the quality of data as it is used in different applications such as customer relationship management, enterprise resource planning, and business analytics.

Analyzing and profiling the data before data integration are essential steps in the planning process and dramatically speed up the development of data integration workflows and mappings. This initial profiling helps organizations to identify and understand their source data. Ultimately, it enables them to reconcile the source data with the target systems and to deliver data that is comprehensive, consistent, relevant, fit-for-purpose, and timely to the business, regardless of its application, use, or origin.
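To make the idea of profiling concrete, here is a minimal sketch in plain Python. It is not how Informatica Data Quality works internally; the `profile` function and the sample records are hypothetical, invented only to show the kind of per-column facts (missing values, distinct values, mixed types) that a profiling pass typically surfaces before integration work begins.

```python
from collections import Counter


def profile(rows):
    """Return simple per-column statistics for a list of dict records."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and blank strings as missing.
        present = [v for v in values if v is not None and str(v).strip() != ""]
        report[col] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            # Mixed types in one column are a common warning sign.
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report


# Hypothetical source records, invented for illustration.
sample = [
    {"id": 1, "country": "US", "postcode": "94063"},
    {"id": 2, "country": "us", "postcode": ""},
    {"id": 3, "country": None, "postcode": 10001},
]

for column, stats in profile(sample).items():
    print(column, stats)
```

Even on three records, a report like this flags the questions worth asking early: why `country` has two spellings of the same value, and why `postcode` arrives as both text and numbers.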

Data Migrations: A saying that springs to mind when I think about data migrations is, “Measure twice, cut once.” Although the origin of this saying relates to cutting timber, this approach also applies to data migration. Ask yourself: Would you move data to a new application without checking if it was in the right shape, size, and format? Wouldn’t you verify whether any data was missing? Wouldn’t it make sense to confirm whether you have the same data multiple times?

Unfortunately, I have seen too many organizations just move data without first focusing on data quality. This can lead to project delays, cost overruns, security breaches, and low adoption of the new applications.

Data migrations are also a major opportunity to add new value to the data. For example, with data enrichment, you can append geocodes or demographic data, making the data more useful in analytics, AI, and machine learning (ML) projects.

AI and Machine Learning: Businesses are excited by the promise of AI and ML. And rightly so. AI and ML are hugely disruptive forces in many areas, from context-aware marketing to fraud detection to early diagnosis of chronic conditions.

And at the heart of all these AI and ML projects is data. That is why data quality is critical to this use case. In my previous blog, I referenced a Google Research paper that states, “Data quality carries an elevated significance in high-stakes AI due to its heightened downstream impact, impacting predictions like cancer detection, wildlife poaching, and loan allocations.”²

However, capturing, preparing, and maintaining high-quality data for AI and ML is not easy. The data needed to train models comes from many different sources, in multiple formats, and in many cases is delivered in huge volumes. This can create a number of data quality issues that impact the AI and ML models. These issues can be classified using the following data quality dimensions:

- Completeness – What data is missing or unusable?

- Conformity – What data is stored in a non-standard format?

- Consistency – What data gives conflicting information?

- Accuracy – What data is incorrect or out of date?

- Duplication – What data records are duplicated?

- Integrity – What data is missing important relationship linkages?

- Range – What scores, values, or calculations are outside of range?

- Data Labelling – Is the data labelled with the correct metadata?

This is not an exhaustive list, but these are the dimensions most widely used with AI and ML models that I have seen. Other dimensions that customers have used include exactness, reliability, timeliness, continuity, and more.
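As a rough illustration of how a few of these dimensions translate into checks, the sketch below counts completeness, conformity, range, and duplication issues in a handful of records. Everything in it is an assumption made for the example: the field names (`email`, `signup_date`, `score`), the ISO date rule, and the 0 to 100 range are hypothetical, and real rules would come from your own business definitions.

```python
import re

# Hypothetical conformity rule: dates must look like YYYY-MM-DD.
DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def check_dimensions(rows):
    """Count issues per data quality dimension for a list of dict records."""
    issues = {"completeness": 0, "conformity": 0, "range": 0, "duplication": 0}
    seen_emails = set()
    for row in rows:
        email = (row.get("email") or "").strip().lower()

        # Completeness: a required field is missing or blank.
        if not email:
            issues["completeness"] += 1

        # Conformity: the value is not stored in the standard format.
        if not DATE_PATTERN.match(row.get("signup_date") or ""):
            issues["conformity"] += 1

        # Range: a present value falls outside the allowed bounds.
        score = row.get("score")
        if score is not None and not 0 <= score <= 100:
            issues["range"] += 1

        # Duplication: the normalized key has already been seen.
        if email and email in seen_emails:
            issues["duplication"] += 1
        seen_emails.add(email)
    return issues


# Hypothetical training records, invented for illustration.
records = [
    {"email": "ana@example.com", "signup_date": "2021-10-11", "score": 87},
    {"email": "ANA@example.com ", "signup_date": "11/10/2021", "score": 87},
    {"email": "", "signup_date": "2021-09-20", "score": 140},
]

print(check_dimensions(records))
```

Dimensions such as accuracy, integrity, and data labelling usually need reference data or domain knowledge, so they are left out of this sketch.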

In addition, resolving the data quality problems across multiple use cases can present a significant challenge if you are not using the right solution. With Informatica Data Quality solutions, you can ensure your data is fit for purpose in four easy steps for all these use cases:

- Discovery. Find all the data impacted by the migrated systems. This is essential during the migration process, but equally valuable after it.

- Profiling. Understand the completeness, conformity, and consistency of your data and prioritize remediation.

- Standardization and Cleansing. Define and apply data quality rules at scale, with an automated, standardized approach to data quality across all the assets being migrated.

- Deduplication. Identify duplicate data that exists in or across current systems and ensure you only migrate the most relevant and correct data.
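The last two steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the product's matching logic: the `standardize` and `deduplicate` helpers, the record fields, and the "most recently updated record wins" survivorship rule are all hypothetical choices made for the example.

```python
def standardize(record):
    """Apply simple cleansing rules: collapse whitespace and normalize case."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
        "updated": record["updated"],
    }


def deduplicate(records):
    """Keep one record per email, preferring the most recently updated."""
    survivors = {}
    for rec in map(standardize, records):
        current = survivors.get(rec["email"])
        # ISO-formatted dates (YYYY-MM-DD) compare correctly as strings.
        if current is None or rec["updated"] > current["updated"]:
            survivors[rec["email"]] = rec
    return list(survivors.values())


# Hypothetical legacy records, invented for illustration.
legacy = [
    {"name": "  jane   doe", "email": "Jane.Doe@Example.com", "updated": "2021-03-01"},
    {"name": "Jane Doe", "email": "jane.doe@example.com ", "updated": "2021-08-15"},
    {"name": "raj patel", "email": "raj@example.com", "updated": "2020-12-30"},
]

for row in deduplicate(legacy):
    print(row)
```

Note that standardization runs before matching. Without it, the two Jane Doe records would not share a key and both would be migrated.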

Data Quality for Everyone, Everywhere

Our ongoing strategy of “Data Quality for Everyone, Everywhere” relies on the core principles of simplicity, productivity, and scale. We believe our recognition in the Gartner 2021 Critical Capabilities for Data Quality Solutions report is a testament to this approach.

We continue to expand our offerings on the Intelligent Data Management Cloud (IDMC) platform. Informatica IDMC extends our core capabilities with extensive and deep product integrations to new cloud-based models. Unlike many other data management solutions, Informatica’s solutions are built on an industry-leading, microservices-based scalable architecture, as opposed to simply containerizing and hosting existing applications. This allows us to build truly scalable and interoperable cloud-based capabilities that will grow and extend to the use cases, loads, interaction models, and more that our customers expect, now and in the future.

If you are looking for a single data quality solution with the breadth and depth of capabilities needed for all your data management needs, look no further than Informatica Data Quality.

¹ Gartner 2021 Critical Capabilities for Data Quality Solutions, October 11, 2021, Ankush Jain, Melody Chien

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

*Informatica has the second-highest score in the Analytics and Data Science, D&A Governance Initiatives, and Master Data Management use cases.

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

² Nithya Sambasivan, Shivani Kapania, Hannah Highfill, Diana Akrong, Praveen Paritosh, Lora Aroyo for Google Research (2021), “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI