5 Steps to Building a Data Lake with Informatica Big Data Management on Azure

Last Published: Jan 12, 2022

Big data is the underpinning of how companies are revolutionizing customer engagement, driving productivity and fueling innovation. It has emerged as a megatrend that is continuing to gain attention.

Big data started with Hadoop. Hadoop was modeled on Google's published designs for MapReduce and the Google File System, and it was developed largely at Yahoo!, where it began as a simple, focused system for indexing web pages for search. Today big data is not just Hadoop; it spans many other technologies. Spark is replacing the batch processor MapReduce as the default execution engine in Hadoop, which opens up new and more complex use cases. From HBase and Cassandra to YARN, Docker containers, Mesos and Kubernetes, Flume and Kafka, and HDFS and S3, big data technologies keep changing.

The future of big data is in the cloud. Moving to the cloud brings many benefits, including shifting costs from capital to operating budgets, faster time to market, flexibility, scalability and the separation of storage and compute. Microsoft Azure is a leader in cloud-based big data. It provides Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS) solutions, and it supports many programming languages, tools and frameworks, including both Microsoft-specific and third-party software and systems.

Creating a Data Lake solution on Microsoft Azure

Enterprise data warehouses (EDWs) have been many organizations' primary mechanism for complex business analytics, reporting and operations, and they serve those use cases well. Their main limitation, however, is how slowly they respond to change: even a small change can take 3-6 months to implement.

Data lakes adapt well to rapid change and innovation, such as managing new data types and answering new types of analytics questions. Data lakes often augment an existing EDW by providing an environment that supports rapid change, ad hoc questions and innovation.

As a result, almost every industry has a potential data lake use case. Organizations can use data lakes to get better visibility into data, eliminate data silos and capture 360-degree views of customers.

Informatica Big Data Management (BDM) provides integrated data management solutions to quickly and holistically integrate, govern and secure big data for your business, and it fully supports the Microsoft Azure ecosystem. You don't need to buy disparate technologies and integrate them yourself; we've done that for you, so you can spend your time delivering actionable insights from all your data.

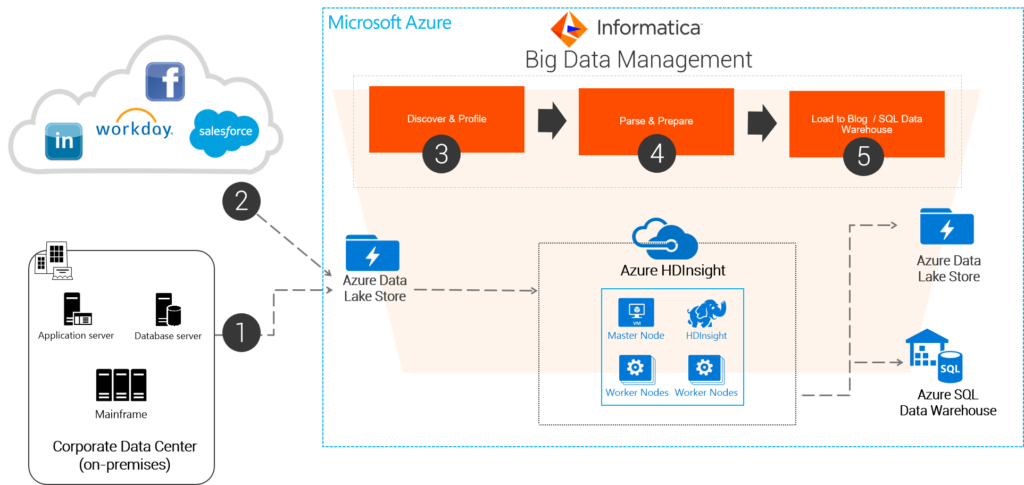

Informatica BDM together with Microsoft Azure can help solve your data lake use case on Azure. The following image shows how we see a typical customer implementing a data lake solution using Informatica Big Data Management on Azure:

Step 1: Start by collecting and moving your data from on-premises systems into Microsoft Azure Data Lake Store (ADLS). You may also consider offloading infrequently used data and batch-loading raw data into a defined landing zone in ADLS, which frees up space in your current enterprise data warehouse. (A few customers use Azure Blob storage for this instead.)
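To make the idea of a defined landing zone concrete, here is a minimal Python sketch of one possible folder convention for batch loads. The zone names (`raw`, `curated`) and the `landing_path` helper are hypothetical and not part of Informatica BDM or ADLS. The point is only that every load lands in a predictable, date-partitioned location.

```python
from datetime import date

# Hypothetical zone names; adapt these to your own lake conventions.
ZONES = ("raw", "curated")


def landing_path(zone: str, source: str, dataset: str, load_date: date) -> str:
    """Build a date-partitioned folder path for one batch load into the lake."""
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone!r}")
    # Lower-case names keep paths predictable across tools and scripts.
    return (
        f"/{zone}/{source.lower()}/{dataset.lower()}/"
        f"{load_date:%Y}/{load_date:%m}/{load_date:%d}/"
    )


print(landing_path("raw", "EDW", "Orders", date(2022, 1, 12)))
# → /raw/edw/orders/2022/01/12/
```

Partitioning by load date makes it easy to reprocess or expire a single day's data without touching the rest of the zone.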

Step 2: Collect cloud application data and streaming data generated by machines and sensors directly in ADLS, instead of staging it in a temporary file system or a data warehouse.
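Here is a minimal sketch of the idea behind Step 2. It assumes events arrive as Python dictionaries with a Unix timestamp in a hypothetical `ts` field, and groups them into hourly partitions so that each group can be appended straight to one lake folder. The actual write to ADLS is deliberately left out, because a connector such as Informatica BDM or an Azure SDK would handle it. `partition_key` and `micro_batch` are illustrative names only.

```python
from collections import defaultdict
from datetime import datetime, timezone


def partition_key(ts: float) -> str:
    """Map a Unix timestamp to an hourly partition such as 2022/01/12/09 (UTC)."""
    dt = datetime.fromtimestamp(ts, tz=timezone.utc)
    return dt.strftime("%Y/%m/%d/%H")


def micro_batch(events):
    """Group sensor events by hour so each group can be appended to one lake folder."""
    batches = defaultdict(list)
    for event in events:
        batches[partition_key(event["ts"])].append(event)
    return dict(batches)


# Hypothetical sensor readings.
events = [
    {"device": "sensor-1", "ts": 1641978000.0, "temp_c": 21.5},
    {"device": "sensor-2", "ts": 1641979800.0, "temp_c": 22.1},
    {"device": "sensor-1", "ts": 1641981600.0, "temp_c": 21.9},
]
for key, batch in sorted(micro_batch(events).items()):
    print(key, len(batch))
```

Using UTC for partition keys avoids duplicate or missing hours around daylight-saving changes.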

Step 3: Discover and profile the data stored in ADLS. Profiling helps you better understand the data's structure and context. At this stage you can also add requirements for enterprise accountability, control and governance so that you comply with corporate and governmental regulations and business service level agreements.
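Profiling tools such as Informatica's compute far richer statistics, but the core idea fits in a few lines of Python. The example below is generic and is not Informatica's implementation. For each column of a small, hypothetical sample of raw string records, it reports the null count, the distinct count and the inferred value types.

```python
from collections import Counter


def infer_type(value: str) -> str:
    """Classify a raw value as int, float, or string by trying to parse it."""
    for caster, name in ((int, "int"), (float, "float")):
        try:
            caster(value)
            return name
        except ValueError:
            pass
    return "string"


def profile(rows):
    """Return null count, distinct count and inferred types for each column."""
    columns = {key for row in rows for key in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        # Treat missing keys and empty strings as nulls.
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "types": Counter(infer_type(v) for v in present),
        }
    return report


rows = [
    {"id": "1", "country": "US", "amount": "19.99"},
    {"id": "2", "country": "", "amount": "5"},
    {"id": "3", "country": "US", "amount": "n/a"},
]
for col, stats in profile(rows).items():
    print(col, stats)
```

In this sample, the `amount` column mixes floats, integers and the string `n/a`. Profiling is meant to surface exactly this kind of inconsistency before the data is used.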

Step 4: Parse and prepare data from weblogs, application server logs or sensor data. These data types typically come in multi-structured or unstructured formats. Parsing extracts features and entities from them, and data quality techniques can then be applied to the results. You can run pre-built transformations and data quality and matching rules natively in Microsoft Azure HDInsight to prepare the data for analysis.
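To illustrate parsing semi-structured log data, the following Python sketch extracts fields from web server lines in the Apache Common Log Format and applies one simple data quality rule. The regular expression, the `parse_line` and `is_valid` helpers, and the sample lines are assumptions made for illustration. In BDM you would use its pre-built parsing and data quality transformations instead.

```python
import re
from datetime import datetime

# Apache Common Log Format: host ident user [time] "request" status size
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_line(line: str):
    """Extract fields from one log line; return None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    record["status"] = int(record["status"])
    # "-" means the server sent no response body.
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


def is_valid(record) -> bool:
    """A simple data quality rule: HTTP status codes must fall in 100-599."""
    return 100 <= record["status"] <= 599


lines = [
    '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326',
    "this line is garbage",
]
parsed = [parse_line(line) for line in lines]
good = [r for r in parsed if r is not None and is_valid(r)]
print(len(good), "parsed,", len(lines) - len(good), "rejected")
```

Lines that do not match the pattern are counted as rejected rather than silently dropped, so parse failures stay visible.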

Step 5: After cleansing and transforming data on Microsoft Azure HDInsight, move high-value curated data to ADLS or to Microsoft Azure SQL Data Warehouse. From there, users can access the data directly with BI reports and applications.

Informatica BDM provides the following capabilities for implementing a data lake on Azure:

- Ease of deployment: Informatica BDM is listed in the Microsoft Azure Marketplace, so customers can get started with a single click. This single-click deployment installs Informatica BDM on a Microsoft Azure VM and integrates it with the Microsoft Azure HDInsight cluster.

- Connectivity: Informatica BDM can read from and write to on-premises databases, cloud sources, Microsoft Azure Blob storage, Azure Data Lake Store (ADLS), Azure SQL Data Warehouse, Azure SQL Database, SQL Server and more.

- Data Preparation: Informatica BDM provides rich data integration and data quality functionality to prepare the data on Hadoop.

- Hadoop Integration: Informatica BDM can push data integration and data quality jobs down to Microsoft Azure HDInsight or to other Hadoop distributions on Azure, such as Cloudera or Hortonworks.
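Step 5 calls for cleansing data before high-value curated data moves to ADLS or Azure SQL Data Warehouse. Deduplication is one common cleansing rule, and the Python sketch below keeps only the most recent record for each key. It is a generic illustration, not a BDM transformation. The field names `customer_id` and `updated_at` are hypothetical, and the rule assumes ISO 8601 date strings, which sort correctly when compared as plain strings.

```python
def curate(records, key="customer_id", version="updated_at"):
    """Keep only the most recent record for each key (a hypothetical curation rule)."""
    latest = {}
    for record in records:
        k = record[key]
        # Replace the stored record only if this one is strictly newer.
        if k not in latest or record[version] > latest[k][version]:
            latest[k] = record
    return sorted(latest.values(), key=lambda r: r[key])


records = [
    {"customer_id": 7, "email": "a@example.com", "updated_at": "2022-01-03"},
    {"customer_id": 7, "email": "b@example.com", "updated_at": "2022-01-10"},
    {"customer_id": 9, "email": "c@example.com", "updated_at": "2022-01-05"},
]
for row in curate(records):
    print(row["customer_id"], row["email"])
```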

Watch this video to see Informatica Big Data Management in action to accelerate building a Data Lake on Azure. With Informatica’s market-leading AI-driven data lake management solutions you can drive actionable insight with your big data. Together with Microsoft Azure, it helps you to accelerate your data-driven digital transformation.

For more information, go to: Informatica Data Lake Solutions for Microsoft Azure HDInsight and Data Lake Store