Why Is Connectivity Foundational for a Data Platform?

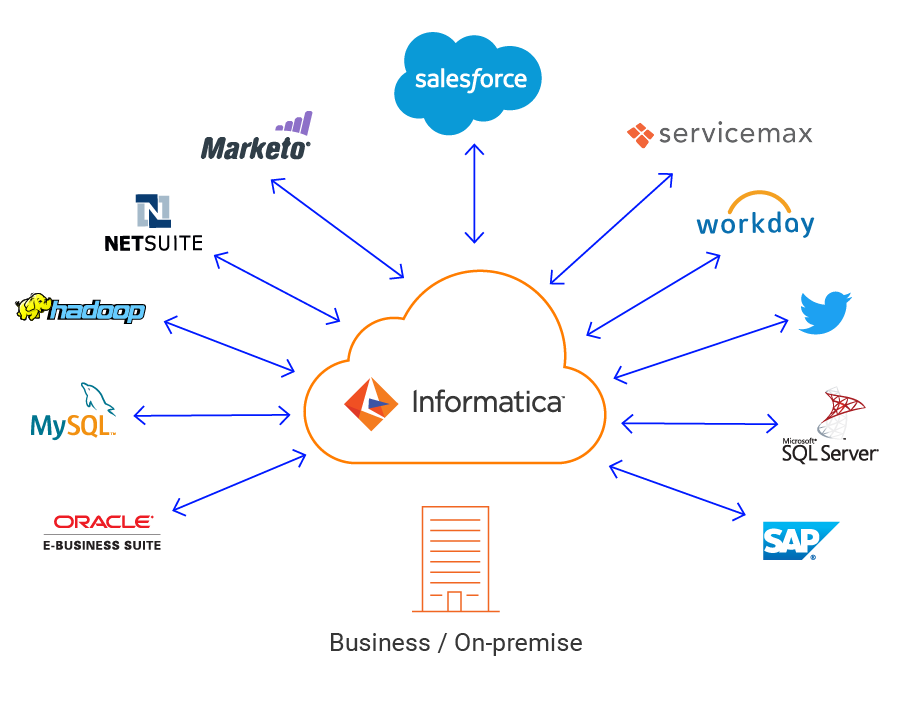

If you take any data processing pipeline – be it for data integration, data quality, data matching and mastering, data security, data masking, or a B2B data exchange pipeline – data is read from somewhere and written somewhere for further consumption. All data processing pipelines start and end with connectivity. In addition to reading and writing data, many pipelines also need mid-stream connectivity, for example, to perform lookups. Accessing data is a necessity for any data-driven initiative; that’s why connectivity is one of the foundational components of Informatica’s Intelligent Data Platform (IDP).

My last blog post focused on the 10 principles of IDP. This post explores the essential capabilities of data connectivity. Connectivity must be:

- Broad: Where does your data live in your organization? In the cloud? On-premises? Inside applications? In relational databases? In NoSQL databases? Inside data warehouses and analytical systems? In files such as CSV, Excel, or other specially formatted files on file systems? Inside object stores like S3? On message buses? Do you use data from social media? How about IoT data? Probably the answer is “most of the above” or “all of the above.” We’ll refer to these various data sources and targets as data endpoints. Would you change your data endpoints if your data processing software could not connect to some of them? Of course not. That’s why connectivity to EVERYTHING is a must. Connectivity must be broad and ubiquitous.

- Flexible and secure: There are myriad ways to establish physical connections with your data endpoints. Data connectivity must support various connection, authentication, and authorization mechanisms, as well as security protocols, so that you can choose how to connect to your data securely.

- Pattern aware: Data endpoints differ widely: ODBC, JDBC, and proprietary interfaces for databases; REST, SOAP, and proprietary interfaces for applications; Sqoop for big data when reading from relational sources; and file system interfaces for files and object store files. Data connectivity must support the most prevalent and performant APIs for your data endpoints. If you are working with streaming or real-time data endpoints, connectivity must follow the best practices of real-time semantics, including order preservation, delivery guarantees, and a minimal memory footprint, to name a few. If you are working with SaaS endpoints, you may be charged each time you access data, and the ingestion cost may be very different from the query cost. Connectivity must be intelligent enough to work economically in such situations.

- Agile: APIs for many data endpoints, especially SaaS applications, keep evolving. Data connectivity must continue to evolve and support the latest and – in many cases – multiple prevalent versions.

- Intelligent and adaptive: Connectivity must learn the schema and structure of the data. Data may be flat/relational or hierarchical; it may have a full schema, a partial schema, or no schema at all. For example, a CSV file may not even have column headers. Connectivity must be able to infer the schema, types, and domain of the data. In some use cases, the schema of the source may change from one run to the next, and connectivity should handle such changes gracefully. During the development and testing cycle of a data processing pipeline, dev/test copies of data endpoints are used. Once the pipeline is ready for production, it is pointed to the production versions of those endpoints. Connectivity must therefore allow proper parameterization of data endpoints.

- Highly performant: Storage I/O is typically far slower than CPU and memory, and remote endpoints add network latency on top of that, so reading and writing can easily become the bottleneck of your data processing pipeline if connectivity is not highly performant.

- Low impact on data endpoints: Connectivity must be highly efficient when reading data from your system of record; otherwise, it can slow that system to a crawl. Similarly, when loading data into a data warehouse, it is usually faster to stage the data first and then use warehouse-specific bulk-load commands to load it in the most efficient manner.

- Catalog aware: Connectivity needs to understand the schema, structure, and domain of all data endpoints. There is a wealth of technical metadata that can be used by a data catalog. Check out the blog on active metadata by my colleague Awez Syed to understand how to maximize the benefits of this metadata foundation.

Connectivity is so essential that we’ve built Informatica’s Intelligent Data Platform so that organizations can access and deliver all enterprise data (on-premises or cloud) quickly, easily, and cost-effectively with out-of-the-box, high-performance connectors.

I’ll write more in a future blog post about pushdown optimization and partitioning capabilities related to connectivity.

Learn more about the Informatica Intelligent Data Platform. And explore blueprints for building an adaptive foundation for data management in our eBook, “Build Your Next-Generation Enterprise Architectures.”