Find out how change data capture (CDC) detects and manages incremental changes at the data source, enabling real-time data ingestion and streaming analytics.

What Is Change Data Capture?

Change data capture (CDC) is a set of software design patterns. It allows users to detect and manage incremental changes at the data source. CDC technology lets users apply changes downstream, throughout the enterprise. CDC captures changes as they happen. This requires a fraction of the resources needed for full data batching. Data consumers can absorb changes in real time. This has less impact on the data source or the transport system between the data source and the consumer. With CDC technology, only the change in data is passed on to the data user, saving time, money and resources. CDC propagates these changes onto analytical systems for real-time, actionable analytics.

Why Change Data Capture Matters in the Modern Enterprise

Today, data is central to how modern enterprises run their businesses. Data has become the key enabler driving digital transformation and business decision-making. Modern data architectures are on the rise. Companies are moving their data from on-premises systems to the cloud, and shifting from batch to streaming data management. But they still struggle to keep up with growing data volumes, variety and velocity. New cloud architectures built on cloud data warehouses, cloud data lakes and data streaming are addressing these challenges.

But the shelf life of data is shrinking. When data is time-sensitive, its value to the business quickly expires. Real-time data insights are the new measurement for digital success. When a company can’t take immediate action, it misses out on business opportunities. This issue is referred to as “perishable insights.” Perishable insights are data insights that provide far greater value than traditional analytics, but only if they are acted on before that value expires. Log files, machine logs, IoT devices, weblogs and social media all produce perishable data. How can you be sure you don’t miss business opportunities due to perishable insights? You need a way to capture data changes and updates from transactional data sources in real time. Change data capture (CDC) is the answer.

The Benefits of Change Data Capture

CDC captures changes from database transaction logs. Then it publishes changes to a destination such as a cloud data lake, cloud data warehouse or message hub. This has several benefits for the organization:

Greater efficiency:

With CDC, only data that has changed is synchronized. This is far more efficient than replicating an entire database. Continuous data updates save time and enhance the accuracy of data and analytics. This is important as data moves from master data management (MDM) systems to production workload processes.

Faster decision-making:

CDC helps organizations make faster decisions. It's important to be able to find, analyze and act on data changes in real time. Then you can create hyper-personal, real-time digital experiences for your customers. For example, real-time analytics enables restaurants to create personalized menus based on historical customer data. Data from mobile or wearable devices delivers more attractive deals to customers. Online retailers can detect buyer patterns to optimize offer timing and pricing.

Lower impact on production:

Moving data from a source to a production server is time-consuming. CDC captures incremental updates with a minimal source-to-target impact. It can read and consume incremental changes in real time. The analytics target is then continuously fed data without disrupting production databases. This opens the door to high-volume data transfers to the analytics target.

Improved time to value and lower TCO:

CDC lets you build your offline data pipeline faster. This saves you from the worries that come with scripting. It means that data engineers and data architects can focus on important tasks that move the needle for your business. It also reduces dependencies on highly skilled application users. This lowers the total cost of ownership (TCO).

Methods for Change Data Capture

There are several types of change data capture. Depending on the use case, each method has its merit. Here are the common methods and how they work, along with their advantages and disadvantages:

| CDC Method | How It Works | Advantages | Disadvantages |
|---|---|---|---|
| Timestamp CDC | Leverages a table timestamp column and retrieves only those rows that have changed since the data was last extracted. | | |
| Triggers CDC | Defines triggers and lets you create your own change log in shadow tables. | Shadow tables can store an entire row to keep track of every single column change. They can also store just the primary key and operation type (insert, update or delete). | |
| Log-based CDC | Transactional databases store all changes in a transaction log that helps the database to recover in the event of a crash. With log-based CDC, new database transactions (including inserts, updates and deletes) are read from the source databases’ transaction logs. Changes are captured without making application-level changes and without having to scan operational tables, both of which add workload and reduce source systems’ performance. | | |
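
As an illustration of the Triggers CDC row, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` table, the `customers_changes` shadow table and the trigger names are hypothetical, chosen only for this example; the shadow table follows the lighter variant described above, storing just the primary key and the operation type.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT);

-- Hypothetical shadow table: one row per change (key and operation only).
CREATE TABLE customers_changes (
    change_id   INTEGER PRIMARY KEY AUTOINCREMENT,
    customer_id INTEGER NOT NULL,
    operation   TEXT NOT NULL
);

CREATE TRIGGER customers_ins AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_changes (customer_id, operation)
    VALUES (NEW.id, 'insert');
END;

CREATE TRIGGER customers_upd AFTER UPDATE ON customers
BEGIN
    INSERT INTO customers_changes (customer_id, operation)
    VALUES (NEW.id, 'update');
END;

CREATE TRIGGER customers_del AFTER DELETE ON customers
BEGIN
    INSERT INTO customers_changes (customer_id, operation)
    VALUES (OLD.id, 'delete');
END;
""")

# Ordinary application writes; the triggers record each one as a side effect.
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("UPDATE customers SET email = 'b@example.com' WHERE id = 1")
conn.execute("DELETE FROM customers WHERE id = 1")

# A downstream reader consumes the shadow table in change order.
change_log = conn.execute(
    "SELECT customer_id, operation FROM customers_changes ORDER BY change_id"
).fetchall()
print(change_log)
```

Note that every write to `customers` now also writes to the shadow table inside the same database, so the change log is maintained by the source system itself.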

Change Data Capture Use Cases

CDC captures changes from the database transaction log. Then it publishes the changes to a destination. Data destinations may include a cloud data lake, cloud data warehouse or message hub. A traditional CDC use case is database synchronization. Real-time streaming analytics and cloud data lake ingestion are more modern CDC use cases.

Traditional Database Synchronization/Replication

Often data change management entails batch-based data replication. Given the growing demand for capturing and analyzing real-time streaming data, companies can no longer go offline and copy an entire database to manage data change. CDC allows continuous replication of smaller datasets, because it addresses only incremental changes.
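
One simple way to picture replicating only incremental changes is the timestamp method from the table of CDC methods. The sketch below uses Python's built-in `sqlite3` module; the `orders` table, its `updated_at` column and the `fetch_changes_since` helper are assumptions made for illustration, not part of any particular product.

```python
import sqlite3


def fetch_changes_since(conn, last_extracted_at):
    """Return rows modified after the previous extraction, oldest first."""
    return conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_extracted_at,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "shipped", "2024-01-01T09:00:00"),
        (2, "pending", "2024-01-01T09:05:00"),
        (3, "pending", "2024-01-01T10:30:00"),
    ],
)

# High-water mark saved by the previous extraction run (ISO 8601 text sorts
# chronologically, so a plain string comparison is enough here).
last_extracted_at = "2024-01-01T09:05:00"
changes = fetch_changes_since(conn, last_extracted_at)

# Advance the mark so the next run skips what was just read.
if changes:
    last_extracted_at = changes[-1][2]
```

Only order 3 is returned, so the replication step handles one row rather than the whole table. A query like this cannot see rows that were deleted since the last run, because there is nothing left to select.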

Imagine you have an online system that is continuously updating your application database. With CDC, you can capture incremental changes to each record, as well as schema drift. So, when a customer returns and updates their information, CDC updates the record in the target database in real time. In a consumer application, you can absorb and act on those changes much more quickly. It takes less time to process a hundred changed records than a million rows. You can also define how to treat the changes (for example, replicate or ignore them).
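
The idea of choosing how to treat each change can be sketched as follows. This is an illustrative model only: the event shape (`op`, `key`, `row`), the in-memory `target` dictionary and the `apply_changes` function are hypothetical stand-ins for a real change stream and target database.

```python
from typing import Callable, Dict, Iterable

ChangeEvent = Dict[str, object]


def apply_changes(
    target: Dict[int, dict],
    events: Iterable[ChangeEvent],
    should_replicate: Callable[[ChangeEvent], bool],
) -> int:
    """Apply change events to the target, skipping those the policy rejects."""
    applied = 0
    for event in events:
        if not should_replicate(event):
            continue  # the policy says to ignore this change
        key = event["key"]
        if event["op"] == "delete":
            target.pop(key, None)
        else:
            # Inserts and updates are both treated as an upsert.
            target[key] = dict(event["row"])
        applied += 1
    return applied


target = {1: {"name": "Ana", "city": "Lisbon"}}
events = [
    {"op": "update", "key": 1, "row": {"name": "Ana", "city": "Porto"}},
    {"op": "insert", "key": 2, "row": {"name": "Ben", "city": "Oslo"}},
    {"op": "delete", "key": 1},
]

# Hypothetical policy: replicate inserts and updates, ignore deletes.
applied = apply_changes(target, events, lambda e: e["op"] != "delete")
```

With this policy the customer's updated city reaches the target, the new customer is added, and the delete is ignored, so two of the three events are applied.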

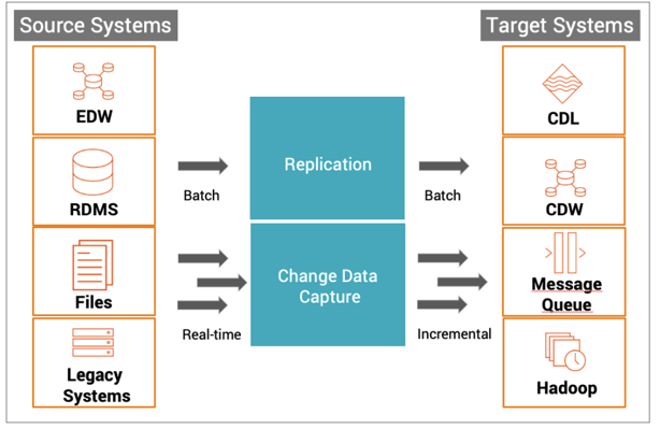

Figure 1: Change data capture is depicted as a component of traditional database synchronization in this diagram

Modern Real-Time Streaming Analytics and Cloud Data Lake Ingestion

The most difficult aspect of managing the cloud data lake is keeping data current. With modern data architecture, companies can continuously ingest CDC data into a data lake through an automated data pipeline. This avoids moving terabytes of data unnecessarily across the network. You can focus on the change in the data, saving computing and network costs. With support for technologies like Apache Spark for real-time processing, CDC is the underlying technology for driving advanced real-time analytics. You can also support artificial intelligence (AI) and machine learning (ML) use cases.

Real-Time Fraud Detection

For data-driven organizations, customer experience is critical to retaining and growing their client base. A good example is in the financial sector. If a large bank faces a sudden increase in fraudulent activities, they need real-time analytics to proactively alert customers about potential fraud. Transactional data needs to be ingested from the database in real time. CDC with ML fraud detection can identify and capture potentially fraudulent transactions in real time. Then it can transform and enrich the data so the fraud monitoring tool can proactively send text and email alerts to customers. Then the customer can take immediate remedial action.

Figure 2: Change data capture is a key part of real-time fraud detection in this reference architecture diagram

Real-Time Marketing Campaigns

Real-time analytics drive modern marketing. Consider an example from the retail sector. A site visitor explores several motorcycle safety products. The retailer sees the customer's viewing pattern in real time. They display the most profitable helmets first. If the customer is price-sensitive, the retailer can dynamically lower the price.

Retailers can also track real-time customer activity on mobile phones. CDC can capture these transactions and feed them into Apache Kafka. Downstream consumers can read the streams of data, integrate them and feed them into a data lake. With offline batch processing, the company can correlate real-time and historical data. When the results are matched against business rules, the company can make actionable decisions and deliver the next-best-action, all while the customer is still shopping.
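
Before a captured change can be fed into a message hub such as Apache Kafka, it has to be turned into a message. The sketch below shows one plausible shape for that step using only the standard library; the `to_message` function, the field names and the `cart_items` table are assumptions for illustration, and no Kafka client is used here.

```python
import json


def to_message(table, op, primary_key, row):
    """Serialize one change into a (key, value) pair of bytes."""
    # Keying by table and primary key keeps all changes to one row together.
    key = json.dumps(
        {"table": table, "pk": primary_key}, sort_keys=True
    ).encode("utf-8")
    value = json.dumps(
        {"op": op, "table": table, "after": row}, sort_keys=True
    ).encode("utf-8")
    return key, value


key, value = to_message(
    "cart_items", "insert", 42, {"sku": "HELMET-01", "qty": 1}
)
```

The resulting `key` and `value` would be handed to whatever producer client the pipeline uses. Keying messages by primary key is a common design choice because Kafka routes messages with the same key to the same partition, which preserves the order of changes for a given row.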

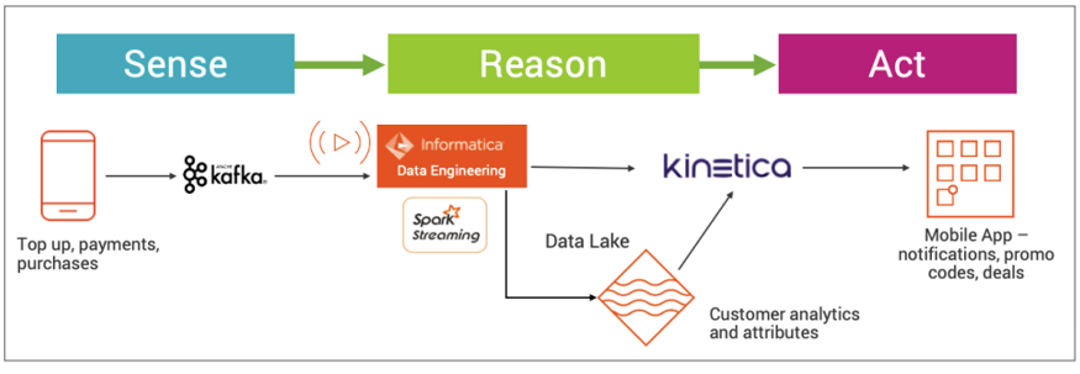

Figure 3: Change data capture feeds real-time transaction data to Apache Kafka in this diagram

ETL for Data Warehousing

Extract, transform, load (ETL) is a three-step data integration process. It combines and synthesizes raw data from a data source. The data is then moved into a data warehouse, data lake or relational database. CDC minimizes the resources required for ETL processes. This is because CDC deals only with data changes. CDC extracts data from the source. Then it transforms the data into the appropriate format. Next, it loads the data into the target destination. CDC enables processing small batches more frequently. Processing just the data changes dramatically reduces load times. It also uses fewer compute resources with less downtime. It shortens batch windows and lowers associated recurring costs. CDC also alleviates the risk of long-running ETL jobs.
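
The extract, transform and load steps over change data can be sketched as a small micro-batch. Everything here is hypothetical and kept in memory for illustration: the `change_log` list stands in for captured changes, `position` is an assumed ordering field, and the `warehouse` dictionary stands in for the target.

```python
def extract(change_log, after_position):
    """Extract only the change records past the last processed position."""
    return [c for c in change_log if c["position"] > after_position]


def transform(change):
    """Reshape a change record into the (hypothetical) warehouse format."""
    return {
        "order_id": change["id"],
        "amount_usd": round(change["amount_cents"] / 100, 2),
    }


def load(warehouse, rows):
    """Upsert transformed rows into the target, keyed by order ID."""
    for row in rows:
        warehouse[row["order_id"]] = row


change_log = [
    {"position": 1, "id": 10, "amount_cents": 1999},
    {"position": 2, "id": 11, "amount_cents": 500},
    {"position": 3, "id": 10, "amount_cents": 2499},
]
warehouse = {}
last_position = 1  # position 1 was already loaded by an earlier run

batch = extract(change_log, last_position)
load(warehouse, [transform(c) for c in batch])

# Remember how far this run got, so the next batch starts after it.
if batch:
    last_position = batch[-1]["position"]
```

Each run touches only the records after `last_position`, which is why small batches can be processed frequently instead of reloading the full source.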

Relational Transactional Databases

Companies often have two databases: a source and a target. You first update a data point in the source database. Next you should reflect the same change in the target database. With CDC, you can keep target systems in sync with the source. First, you collect data manipulation language (DML) instructions. Then you collect data definition language (DDL) instructions. These can include insert, update, delete, create and modify operations. Keep target and source systems in sync by replicating these operations in real time.
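
To make the DML and DDL replication concrete, the sketch below replays a captured sequence of operations against a target database using Python's built-in `sqlite3` module. The `captured` list, the `accounts` table and the `replay` function are illustrative assumptions; a real CDC tool would obtain these operations from the source rather than from a hard-coded list.

```python
import sqlite3

# Hypothetical captured operations, in the order they ran on the source.
captured = [
    ("ddl", "CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT)", ()),
    ("dml", "INSERT INTO accounts (id, owner) VALUES (?, ?)", (1, "Ana")),
    ("ddl", "ALTER TABLE accounts ADD COLUMN tier TEXT", ()),
    ("dml", "UPDATE accounts SET tier = ? WHERE id = ?", ("gold", 1)),
    ("dml", "INSERT INTO accounts (id, owner, tier) VALUES (?, ?, ?)",
     (2, "Ben", "basic")),
    ("dml", "DELETE FROM accounts WHERE id = ?", (2,)),
]


def replay(target, operations):
    """Apply captured operations to the target in their original order."""
    for _kind, sql, params in operations:
        target.execute(sql, params)
    target.commit()


target = sqlite3.connect(":memory:")
replay(target, captured)

rows = target.execute(
    "SELECT id, owner, tier FROM accounts ORDER BY id"
).fetchall()
print(rows)
```

Order matters: the `UPDATE` that sets `tier` only succeeds because the `ALTER TABLE` that adds the column was replayed first, which is why schema changes and data changes need to be replicated as one ordered stream.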

Customer Case Studies for Change Data Capture

KLA is a leading maker of process controls and yield management systems. Their customers are semiconductor manufacturers. They needed better analytics for their growing customer base. They also needed to perform CDC in Snowflake. They looked to Informatica and Snowflake to help them with their cloud-first data strategy. Cloud Data Ingestion and Replication delivered continuous data replication. Change data was moved into their Snowflake cloud data lake. They were able to move 1,000 Oracle database tables over a single weekend. This made 12 years of historical Enterprise Resource Planning (ERP) data available for analysis. They also captured and integrated incremental Oracle data changes directly into Snowflake.

A leading global financial company is the next CDC case study. The company and its customers faced an increasing number of fraudulent transactions in the banking industry. They needed to be able to send customers real-time alerts about fraudulent transactions. They put a CDC sense-reason-act framework to work. They ingested transaction information from their database. A fraud detection ML model detected potentially fraudulent transactions. The financial company alerted customers in real time. Real-time streaming analytics data delivered out-of-the-box connectivity. The dream of end-to-end data ingestion and streaming use cases became a reality.

Summary

Technologies like change data capture can help companies gain a competitive advantage. CDC helps businesses make better decisions, increase sales and reduce operational costs. Selecting the right CDC solution for your enterprise is important. Informatica Cloud Data Ingestion and Replication (CMI) is the data ingestion and replication capability of the Informatica Intelligent Data Management Cloud (IDMC) platform. CMI delivers:

- Intelligence
  - Integration within an end-to-end AI-powered data management solution. Track, capture and update changed data in real time with automatic schema drift support to accelerate database replication and synchronization use cases

- ETL and ELT
  - Part of a best-in-class ETL and ELT engine to process data and handle change data management in the most efficient way

- Flexibility
  - Ability to capture changes to data in source tables and replicate those changes to target tables and files
  - Ability to read change data directly from the RDBMS log files or the database logger for Linux, UNIX and Windows
  - Change data capture included for these sources and targets:
    - Oracle
    - SQL Server
    - PostgreSQL
    - Salesforce
    - Kafka
    - Snowflake
    - Azure Synapse
    - Amazon Redshift
    - Databricks Delta
    - Google BigQuery
    - IBM Db2

- Real-time functionality
  - A streaming pipeline to feed data for real-time analytics use cases, such as real-time dashboarding and real-time reporting

Next Steps

CDC lets companies quickly move and ingest large volumes of their enterprise data from a variety of sources into cloud or on-premises repositories.

To learn more about Informatica CDC streaming data solutions, visit the Cloud Data Ingestion and Replication webpage and read the following datasheets and solution briefs: