Change Data Capture: The Triangle Offense of the Data World

The fundamental shift from on-premises to cloud is disrupting traditional methods of handling, analyzing, and processing data. Modern cloud data management enables companies to take advantage of the exponential growth of data and provides benefits in performance, availability, cost, manageability, and flexibility. This technological disruption has a close parallel in the world of sports. Take the game of basketball as an example.

If you follow basketball, you know about the success of the Chicago Bulls during the 1990s. They had the best players and coach, who helped them win many championships. But there was one more thing that made them successful: a modern and disruptive style of playing the game.

The Chicago Bulls adopted a method called “the triangle offense.” It is an offensive strategy where the goal is to fill five spots (shown in the diagram below), creating proper spacing between players and allowing each one to pass to four teammates. Every pass and cut has a purpose, and the defense dictates everything.

Data is the new lifeblood of every company’s business model

Data has gone from something cumbersome and expensive to store to the lifeblood of every company's business model. An IDC study predicts that the world will generate about 90 zettabytes of data in 2020, more than all the data produced in the previous 10 to 15 years, and that this figure will grow to 175 zettabytes by 2025. The following trends have brought us to this point:

- Connected devices, apps, and systems are generating more data than ever before.

- Cloud is driving down the cost of storage, and customers no longer need to decide what data to keep and what data to throw away.

- With the cloud providing pay-as-you-go, on-demand compute, organizations can quickly analyze their data in a variety of ways to get insights.

If you can store every relevant data point about your business (a volume that can grow massive), analyze all of it in different ways, and distill it down to business insights, you can fuel innovation in your organization and gain a competitive advantage.

But in the real world, customers face new challenges in managing and analyzing their data. We hear from customers all the time that they want to extract more value from their data, but they struggle to capture, store, and analyze all the data generated by today's digital businesses. They need the flexibility to analyze the data in a variety of ways, using a broad set of analytics engines to meet existing and future analytics use cases. Customers also need to go beyond operational reporting on historical data and use machine learning and real-time analytics to predict future outcomes accurately.

Next-generation technologies like real-time change data capture, ingestion, and streaming analytics are the key elements of the triangle offense in the world of data. They help organizations overcome these challenges by identifying risks in real time and acting on them. In this blog post, we describe these technologies and their real-world use cases.

What is change data capture?

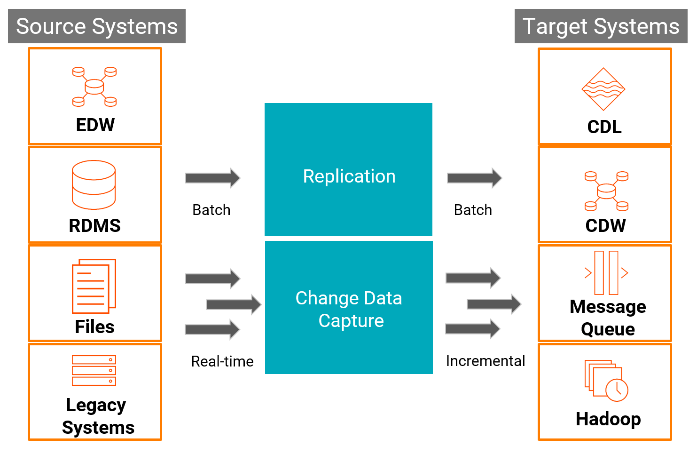

Change data capture (CDC) is a design pattern that detects changes at the data source and then applies them throughout the enterprise. For example, suppose you have two databases (source and target) and you update a data point in the source database. You want the same change reflected in the target database. With CDC, you can collect transactional data manipulation language (DML) and data definition language (DDL) operations (for example, insert, update, and delete for DML; create and alter for DDL) and replicate them in near real time to keep target systems in sync with the source system.
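
To make the replication step concrete, here is a minimal sketch of a target applying captured DML changes in commit order. The `ChangeEvent` shape (operation, primary key, full row image) and the `apply_changes` helper are hypothetical and only illustrate the pattern; real CDC tools define their own event formats.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ChangeEvent:
    # Hypothetical event shape, not any specific product's format
    op: str                               # "insert", "update", or "delete"
    key: int                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # full row after the change (None for deletes)


def apply_changes(target: Dict[int, Dict[str, Any]], events: List[ChangeEvent]) -> None:
    """Replay source-side DML changes, in commit order, onto a target table."""
    for ev in events:
        if ev.op in ("insert", "update"):
            # Treat both as an upsert so replaying an event leaves the target correct
            target[ev.key] = dict(ev.row)
        elif ev.op == "delete":
            target.pop(ev.key, None)
        else:
            raise ValueError(f"unknown operation: {ev.op}")


target = {1: {"id": 1, "balance": 100}}
events = [
    ChangeEvent("update", 1, {"id": 1, "balance": 80}),
    ChangeEvent("insert", 2, {"id": 2, "balance": 50}),
    ChangeEvent("delete", 1),
]
apply_changes(target, events)
print(target)  # {2: {'id': 2, 'balance': 50}}
```

Treating inserts and updates as upserts is a common design choice because it makes the apply step tolerant of events that are delivered more than once.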

CDC is used for continuous, incremental data synchronization and loading. It keeps multiple systems in sync with data changes (insert, update, delete) and also monitors the source data for schema changes. CDC can dynamically modify the data stream to accommodate those schema changes, which is essential when different types of data arrive from live data sources. Because CDC captures changes continuously, they typically reach target systems within seconds or minutes. The data is ingested into targets such as cloud data warehouses, data lakes, or cloud messaging systems, giving organizations actionable data for advanced analytics and AI/ML use cases.
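
Incremental loading can be illustrated with the simplest form of CDC, query-based (polling) capture, which reads only rows changed since a saved high-water mark. This is a swapped-in technique chosen for brevity. Log-based CDC reads the database transaction log instead and, unlike this approach, also sees hard deletes. The `orders` table and its monotonically increasing `version` column are assumptions for the sketch.

```python
import sqlite3
from typing import List, Tuple


def extract_increment(conn: sqlite3.Connection, high_water_mark: int) -> Tuple[List[tuple], int]:
    """Return rows changed since high_water_mark, plus the new mark.

    Assumes a hypothetical `orders` table whose `version` column is bumped
    by the application on every insert or update.
    """
    rows = conn.execute(
        "SELECT id, status, version FROM orders WHERE version > ? ORDER BY version",
        (high_water_mark,),
    ).fetchall()
    # Advance the mark to the newest change seen; keep the old mark if nothing changed
    new_mark = rows[-1][2] if rows else high_water_mark
    return rows, new_mark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, version INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 1), (2, "new", 2)])

# Initial load: everything after version 0
batch, mark = extract_increment(conn, 0)
print(batch, mark)  # [(1, 'new', 1), (2, 'new', 2)] 2

# One row changes; the next poll picks up only that change
conn.execute("UPDATE orders SET status = 'shipped', version = 3 WHERE id = 1")
batch, mark = extract_increment(conn, mark)
print(batch, mark)  # [(1, 'shipped', 3)] 3
```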

How can Informatica help drive real-world use cases for streaming analytics on CDC data?

Streaming data is creating a new set of industry use cases that would not be possible without stream processing and analytics. Streaming analytics has a wide variety of use cases in major industries such as retail, manufacturing, healthcare, and banking.

In the fraud detection use case in the banking industry, the customer runs a machine learning model on data coming from its transactional systems. Change data from the transactional systems is captured using CDC streaming techniques and ingested into messaging systems, and the machine learning models are operationalized on that streaming data. The bank's customers can then be alerted about potentially fraudulent transactions in real time, which helps them take remedial action. It also helps the bank reduce the risk of litigation from its customers.
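
The scoring step of such a pipeline can be sketched as a function that consumes a stream of transactions and emits alerts. To keep it self-contained, a simple rule stands in for the machine learning model: a transaction is flagged when it exceeds a multiple of the account's running average. The `ratio` and `min_history` parameters and the `(account, amount)` record shape are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def flag_suspicious(
    txns: Iterable[Tuple[str, float]],
    ratio: float = 5.0,
    min_history: int = 3,
) -> Iterator[Tuple[str, float]]:
    """Yield (account, amount) for transactions far above the account's running mean.

    A deliberately simple stand-in for an ML fraud model; ratio and
    min_history are hypothetical tuning parameters.
    """
    count: Dict[str, int] = defaultdict(int)
    total: Dict[str, float] = defaultdict(float)
    for account, amount in txns:
        n = count[account]
        # Only score once there is enough history for this account
        if n >= min_history and amount > ratio * (total[account] / n):
            yield account, amount
        # Update per-account state after scoring, so a transaction never scores against itself
        count[account] += 1
        total[account] += amount


stream = [
    ("acct-1", 20.0), ("acct-1", 25.0), ("acct-1", 15.0),
    ("acct-2", 500.0),   # no history yet, so not scored
    ("acct-1", 400.0),   # far above acct-1's average of 20.0
]
for alert in flag_suspicious(stream):
    print("ALERT", alert)  # ALERT ('acct-1', 400.0)
```

Because the function is a generator that keeps only per-account state, it processes events one at a time, as a streaming consumer would.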

Informatica offers the Sense-Reason-Act framework for real-time streaming data management. This framework provides end-to-end data engineering capabilities to ingest real-time data, enrich it in real time or in batches, and operationalize actions on it, all in a single platform with a simple, unified user experience.

The Informatica Cloud Mass Ingestion (CMI) Service provides database ingestion capabilities that help you ingest initial and incremental loads from relational databases such as Oracle, SQL Server, and MySQL into cloud data lakes, cloud data warehouses, and messaging hubs. It also offers schema-drift capabilities that manage changes in the source schema automatically, and it provides real-time monitoring of ingestion jobs with lifecycle management and alerting.
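
The idea behind schema-drift handling can be shown in a few lines: before writing a change record, compare its columns with the target table and add any that are missing. This is a generic sketch using SQLite, not how CMI implements the feature. The `upsert_with_drift` helper and the `customers` table are hypothetical.

```python
import sqlite3
from typing import Any, Dict


def upsert_with_drift(conn: sqlite3.Connection, table: str, row: Dict[str, Any]) -> None:
    """Write a full-row change record, first adding any columns the target lacks.

    `table` and the column names are interpolated into SQL, so they must be
    trusted identifiers. INSERT OR REPLACE rewrites the whole row, which
    assumes each record carries the complete row image.
    """
    # PRAGMA table_info returns one row per column; index 1 is the column name
    existing = {info[1] for info in conn.execute(f"PRAGMA table_info({table})")}
    for col in row:
        if col not in existing:
            # Schema drift: the source gained a column, so extend the target
            conn.execute(f"ALTER TABLE {table} ADD COLUMN {col}")
    cols = ", ".join(row)
    placeholders = ", ".join("?" for _ in row)
    conn.execute(
        f"INSERT OR REPLACE INTO {table} ({cols}) VALUES ({placeholders})",
        tuple(row.values()),
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
upsert_with_drift(conn, "customers", {"id": 1, "name": "Ana"})
# Later, the source adds an email column
upsert_with_drift(conn, "customers", {"id": 2, "name": "Ben", "email": "ben@example.com"})
print(conn.execute("SELECT id, name, email FROM customers ORDER BY id").fetchall())
# [(1, 'Ana', None), (2, 'Ben', 'ben@example.com')]
```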

With Informatica Data Engineering Streaming (DES), customers can continuously ingest and process data from a variety of streaming sources using open source technologies like Apache Spark and Apache Kafka. DES provides out-of-the-box capabilities to parse, filter, enrich, aggregate, and cleanse streaming data, and it helps operationalize machine learning models on that data. With these capabilities, customers can perform real-time analytics on CDC data to address their streaming analytics use cases.
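
The parse, filter, enrich, and aggregate stages can be pictured as a chain of small steps applied to each change record. The sketch below uses plain Python generators rather than the DES or Spark APIs. The JSON event format and the `STORE_REGION` lookup table are hypothetical. A streaming engine would run the same logical stages distributed and continuously.

```python
import json
from collections import Counter
from typing import Dict, Iterable, Iterator

# Hypothetical reference data used for enrichment
STORE_REGION: Dict[int, str] = {10: "north", 20: "south"}


def parse(lines: Iterable[str]) -> Iterator[dict]:
    # Parse and cleanse: turn raw JSON change records into dicts, dropping malformed lines
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue


def only_inserts(events: Iterable[dict]) -> Iterator[dict]:
    # Filter: keep only new sales, ignoring updates and deletes
    return (e for e in events if e.get("op") == "insert")


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    # Enrich: join each event with reference data
    for e in events:
        yield {**e, "region": STORE_REGION.get(e["store_id"], "unknown")}


def sales_by_region(events: Iterable[dict]) -> Counter:
    # Aggregate: total sale amount per region
    totals: Counter = Counter()
    for e in events:
        totals[e["region"]] += e["amount"]
    return totals


raw = [
    '{"op": "insert", "store_id": 10, "amount": 30}',
    '{"op": "update", "store_id": 10, "amount": 35}',
    'not json',
    '{"op": "insert", "store_id": 20, "amount": 12}',
    '{"op": "insert", "store_id": 10, "amount": 5}',
]
print(sales_by_region(enrich(only_inserts(parse(raw)))))
# Counter({'north': 35, 'south': 12})
```

Because each stage is lazy, records flow through the whole chain one at a time instead of being materialized between steps.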

Summary and next steps

With change data capture and real-time streaming analytics technologies, organizations can solve business challenges by capturing, integrating, analyzing, and reporting on data as it arrives. Applying real-time analytics to CDC data shows you what is and isn't working at that very moment. Because these insights are based on current data, failing to act on them can lead to operational failures and customer dissatisfaction.

Join us at the upcoming live webinar Change Data Capture for Real-Time Data Integration and Streaming Analytics to learn more from the experts. Additionally, download the new whitepaper on change data capture.