Guide to CI/CD and DevOps for Big Data Engineering Management

Last Published: Mar 18, 2025

In today’s software development world, DevOps and CI/CD have been widely adopted, but the terms are often confused and, as a result, misused. In this post, I define each practice and explain how Informatica Big Data Management (BDM) integrates with CI/CD and DevOps pipelines in a single environment.

What Do CI and CD Mean?

Continuous Integration (CI) is a software development practice in which all developers merge code changes into a central repository multiple times a day. Continuous Delivery (CD) adds the practice of automating the entire software release process.

With CI, each change in code triggers an automated build-and-test sequence for the given project, providing feedback to the developer who made the change.

CD extends this to infrastructure provisioning and deployment, which can span multiple stages; the final release step may still be triggered manually. What matters is that each of these processes is automated, with every run fully logged and visible to the entire team.
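To make the "automated, fully logged" idea concrete, here is a minimal Python sketch of a pipeline runner that executes stages in order, logs every result, and stops at the first failure. It is an illustration only, not how Jenkins or any other CI server is implemented, and the stage names are hypothetical.

```python
import logging
from typing import Callable, List, Tuple

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, bool]]:
    """Run stages in order, log every result, and stop at the first failure."""
    results: List[Tuple[str, bool]] = []
    for name, step in stages:
        try:
            ok = bool(step())
        except Exception:
            # An exception counts as a failed stage; the traceback goes to the log.
            log.exception("stage %s raised an exception", name)
            ok = False
        log.info("stage=%s result=%s", name, "PASS" if ok else "FAIL")
        results.append((name, ok))
        if not ok:
            break  # later stages never run after a failure
    return results


if __name__ == "__main__":
    # Hypothetical stages; a real pipeline would call build, test, and deploy tooling.
    stages = [
        ("build", lambda: True),
        ("unit-test", lambda: True),
        ("deploy-to-qa", lambda: False),
        ("deploy-to-prod", lambda: True),
    ]
    print(run_pipeline(stages))
```

Stopping at the first failed stage is what gives the developer fast feedback: a broken build never reaches the later deployment stages.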

What Is DevOps?

DevOps is a cultural shift, or movement, that encourages communication and collaboration so that teams can build better-quality software more quickly and more reliably.

DevOps organizations break down the barriers between Operations and Engineering by cross-training each team in the other’s skills. This approach leads to higher-quality collaboration and more frequent communication.

How Do DevOps and CI/CD Work Together?

In Agile methodology, which focuses on collaboration, customer feedback, and rapid releases, DevOps plays a vital role by bringing development and operations teams together.

Agile development today is hard to imagine without CI. Because CI makes it easy for development and operations teams to collaborate, software changes can be made and released frequently in small increments instead of in infrequent, large releases.

CI/CD with Big Data Management

Informatica Big Data Management supports every component of a CI/CD pipeline: it integrates with version control systems for versioning, and its infacmd command line utility can be used in deployment automation scripts. When you build a CI/CD pipeline, consider automating three aspects of development: Versioning, Collaboration, and Integration & Delivery.

Versioning

Informatica developers create and update objects such as mappings, workflows, applications, and data objects as part of their daily activities. When multiple developers work on the same objects, it is critical to keep these objects under version control.

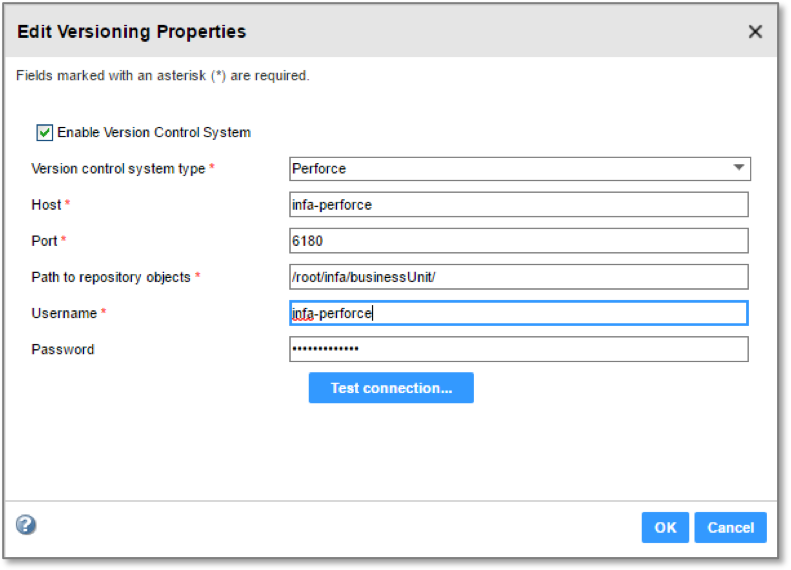

Recent releases of Big Data Management integrate with several version control systems, such as Git, SVN, and Perforce.

When you enable version control in Big Data Management, objects in the Model Repository Service (MRS) are placed under version control, and developers can check objects in to and out of the version control repository. With version control enabled on the MRS, developers can use the following functionality:

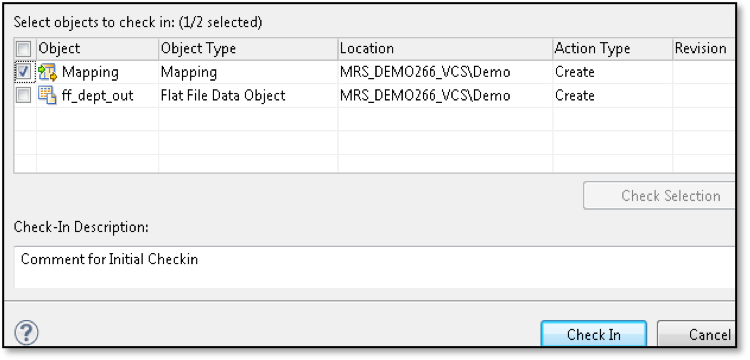

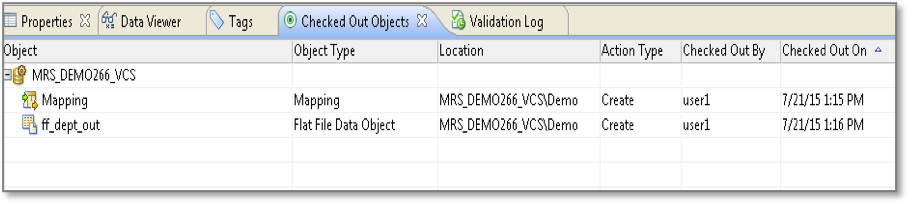

- Ability to check in / check out objects in MRS

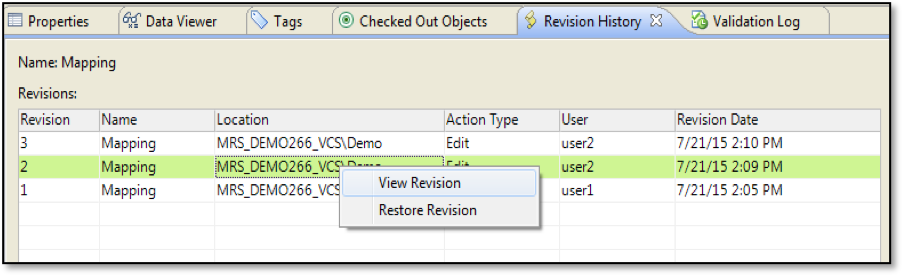

- Ability to maintain versions of an object in the MRS. The current version of an object is stored in the MRS, and previous versions are stored in version control.

- Version-based extraction for deployment

- Ability to compare different objects in version control

- Ability to compare various versions of objects
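The check-in/check-out and versioning behavior listed above can be pictured with a small toy model. This is an illustration under stated assumptions, not the MRS or any version control API: `VersionedObject` and its methods are hypothetical names, the current version lives on the object (standing in for the MRS), and earlier versions live in `history` (standing in for version control).

```python
import difflib
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class VersionedObject:
    """Hypothetical toy model: current version on the object, older versions in history."""
    name: str
    content: str
    version: int = 1
    locked_by: Optional[str] = None
    history: List[str] = field(default_factory=list)  # previous versions, oldest first

    def check_out(self, user: str) -> None:
        # Only one user may hold the check-out lock at a time.
        if self.locked_by is not None and self.locked_by != user:
            raise PermissionError(f"{self.name} is checked out by {self.locked_by}")
        self.locked_by = user

    def check_in(self, user: str, new_content: str) -> int:
        if self.locked_by != user:
            raise PermissionError(f"{user} has not checked out {self.name}")
        self.history.append(self.content)  # the old current version moves to history
        self.content = new_content
        self.version += 1
        self.locked_by = None
        return self.version

    def get_version(self, n: int) -> str:
        """Version-based extraction: version n is history[n - 1], or the current content."""
        if n == self.version:
            return self.content
        if 1 <= n < self.version:
            return self.history[n - 1]
        raise ValueError(f"no version {n} of {self.name}")

    def diff(self, a: int, b: int) -> List[str]:
        """Compare two versions as a unified diff."""
        return list(difflib.unified_diff(
            self.get_version(a).splitlines(), self.get_version(b).splitlines(),
            fromfile=f"{self.name}@v{a}", tofile=f"{self.name}@v{b}", lineterm=""))


if __name__ == "__main__":
    m = VersionedObject("m_load_customers", "source -> filter -> target")
    m.check_out("alice")
    m.check_in("alice", "source -> filter -> lookup -> target")
    print("\n".join(m.diff(1, 2)))
```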

Collaboration

Big Data Management offers simple drag-and-drop functionality, so developers can easily build complex mappings with many transformations and extensive mapping logic. As a mapping grows over time and more steps are integrated into it, it is common for multiple users to work on the same mapping or workflow. Version control lets multiple developers work on the same set of objects without overwriting each other’s changes.

Big Data Management provides the following capabilities for better collaboration:

- Ability to have multiple developers work on the same set of objects

- Ability to work on multiple release vehicles in parallel

- Ability to collaborate between various personas such as developers and release managers

- Automated conflict detection

- Automated conflict resolution

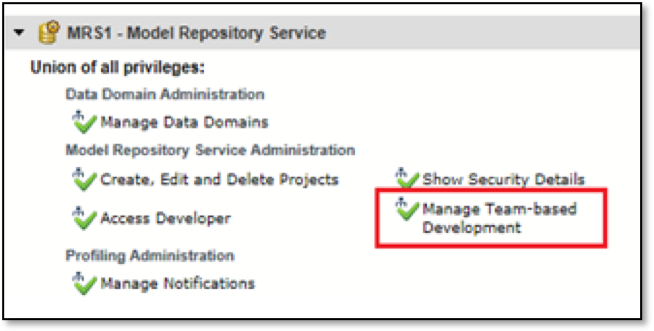

- Additional privilege to manage team-based development

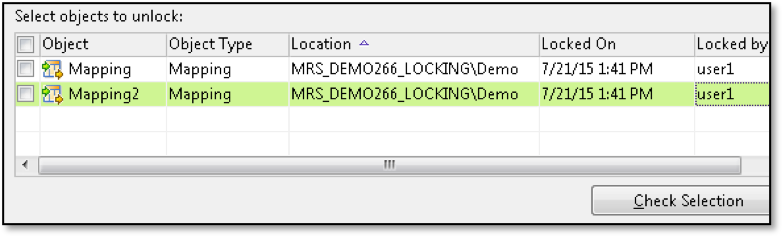

- Ability to unlock objects locked by users

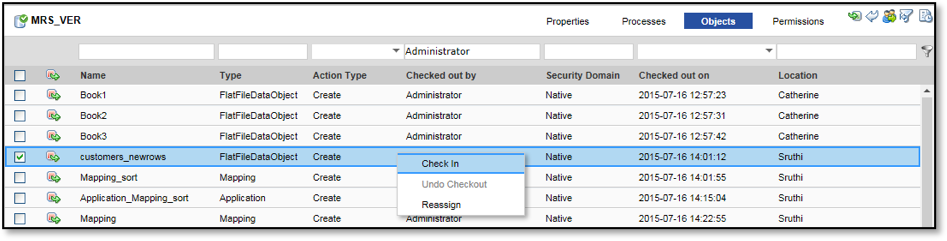

- Ability to check in checked-out objects

- Ability to reassign the objects to different users
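Conflict detection and the administrative unlock and reassign actions can be sketched as follows. This is a hypothetical model, not a Big Data Management API: each check-in records the version the developer started from, and if another check-in has landed since then, the sketch raises a conflict instead of silently overwriting. Automated conflict resolution is out of scope here.

```python
from dataclasses import dataclass
from typing import Optional, Set


class ConflictError(Exception):
    """Raised when a check-in is based on a version that is no longer current."""


@dataclass
class SharedObject:
    name: str
    version: int = 1
    locked_by: Optional[str] = None


class TeamRepository:
    """Hypothetical sketch of team-based development rules."""

    def __init__(self, admins: Set[str]):
        # Users holding the (hypothetical) team-management privilege.
        self.admins = admins

    def check_in(self, obj: SharedObject, user: str, base_version: int) -> int:
        if obj.locked_by not in (None, user):
            raise PermissionError(f"{obj.name} is locked by {obj.locked_by}")
        # Conflict detection: someone else checked in after this user's base version.
        if base_version != obj.version:
            raise ConflictError(
                f"{obj.name}: edited from v{base_version}, but current is v{obj.version}")
        obj.version += 1
        obj.locked_by = None
        return obj.version

    def _require_admin(self, actor: str) -> None:
        if actor not in self.admins:
            raise PermissionError(f"{actor} lacks the team-management privilege")

    def unlock(self, obj: SharedObject, actor: str) -> None:
        """Release a lock held by another user."""
        self._require_admin(actor)
        obj.locked_by = None

    def reassign(self, obj: SharedObject, actor: str, new_owner: str) -> None:
        """Hand a locked object over to a different user."""
        self._require_admin(actor)
        obj.locked_by = new_owner
```

Comparing against a base version (optimistic concurrency) is one common way to detect conflicts; it lets parallel work proceed and only blocks the second, stale check-in.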

Continuous Delivery

Continuous delivery lets users automate the end-to-end CI/CD pipeline. With Big Data Management, users can create an archive file for a BDM application and deploy it to the Data Integration Service (DIS) in any environment. Big Data Management provides the following functionality for continuous delivery:

- Ability to automatically deploy code from one environment to another without impacting other objects

- Ability to automatically run test cases for objects deployed

- Ability to take a course of action for successful and unsuccessful test results

- Ability to update a tracking system (such as Jira / ServiceNow) to keep track of the overall workflow
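The deploy, test, act, and report sequence above might look like this as a minimal Python sketch. All four hooks (`deploy`, `run_tests`, `update_ticket`, `rollback`) are hypothetical callables you would supply, for example wrappers around infacmd, a test runner, and a Jira or ServiceNow client; rolling back on failure is just one possible course of action.

```python
from typing import Callable, Dict


def deliver(
    app: str,
    env: str,
    deploy: Callable[[str, str], None],
    run_tests: Callable[[str, str], Dict[str, bool]],
    update_ticket: Callable[[str], None],
    rollback: Callable[[str, str], None],
) -> bool:
    """Deploy one application, test it, act on the result, and report to a tracker.

    All callables are hypothetical hooks supplied by the caller.
    """
    deploy(app, env)
    results = run_tests(app, env)  # maps test name -> passed?
    failed = sorted(name for name, ok in results.items() if not ok)
    if failed:
        # Example course of action for failures: roll back, then record why.
        rollback(app, env)
        update_ticket(f"{app} on {env}: FAILED ({', '.join(failed)}); rolled back")
        return False
    update_ticket(f"{app} on {env}: all {len(results)} test(s) passed")
    return True
```

Passing the hooks in as parameters keeps the decision logic independent of any particular deployment tool or tracking system.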

Deployment Best Practices with Big Data Management

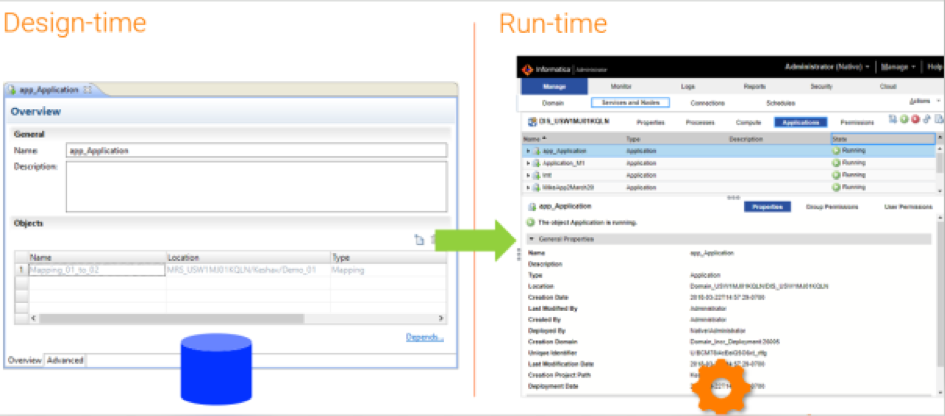

Objects in a Big Data Management development project are classified as design-time objects or run-time objects. Design-time objects are the objects that developers build in the Developer tool and store in the MRS. Objects deployed from the MRS to the DIS are called run-time objects. You can build, update, and view design-time objects in the Informatica Developer client, and you can view and manage run-time objects in the Informatica Administrator tool.

Design-time objects consist of projects, folders, mappings, and workflows. Run-time objects consist of deployed applications, mappings, and workflows inside the applications.

To deploy, you select the objects and package them into an application archive file (.iar). Informatica command line utilities such as infacmd can create .iar files, so you can call these infacmd commands from your DevOps automation scripts.
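As an illustration, a Jenkins build step might wrap the infacmd call in a small Python helper like the one below. The option names passed to `infacmd tools deployApplication` are our assumption and should be checked against the infacmd Command Reference for your release; the domain, repository, and application names are hypothetical. The caller supplies the path where the archive is expected, and the `run` parameter exists so the helper can be tested without infacmd installed.

```python
import subprocess
from pathlib import Path
from typing import Callable, List, Sequence


def build_iar_command(domain: str, user: str, password: str, mrs: str,
                      app_path: str, out_dir: str) -> List[str]:
    # ASSUMPTION: option names reflect our reading of "infacmd tools deployApplication";
    # verify them against the Command Reference for your Informatica release.
    return [
        "infacmd.sh", "tools", "deployApplication",
        "-dn", domain, "-un", user, "-pd", password,
        "-rs", mrs, "-ap", app_path, "-od", out_dir,
    ]


def masked(cmd: Sequence[str]) -> List[str]:
    """Copy of cmd with the value after -pd hidden, for safe logging."""
    return ["****" if i > 0 and cmd[i - 1] == "-pd" else arg for i, arg in enumerate(cmd)]


def create_iar(cmd: List[str], expected_iar: Path,
               run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> Path:
    """Run the archive command and confirm the .iar file was actually produced."""
    print("running:", " ".join(masked(cmd)))
    result = run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        raise RuntimeError(f"infacmd failed ({result.returncode}): {result.stderr.strip()}")
    if not expected_iar.is_file():
        raise FileNotFoundError(f"infacmd succeeded but {expected_iar} was not created")
    return expected_iar


if __name__ == "__main__":
    # Hypothetical names; create_iar(cmd, Path("/tmp/iar/app_sales.iar")) would execute it.
    cmd = build_iar_command("Dom_Dev", "ci_user", "secret", "MRS_Dev",
                            "Project_Sales/app_sales", "/tmp/iar")
    print(" ".join(masked(cmd)))
```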

After you create the .iar file, you can deploy it to the DIS in any environment, such as Dev, QA, or Prod.
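One way to deploy the same .iar file to different environments is to keep the environment-specific values in a single map and build the deployment command from it. In this sketch the environment, domain, and service names are hypothetical, and the option names for `infacmd dis deployApplication` are our assumption, to be confirmed in the Command Reference for your release.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Environment:
    domain: str
    dis: str  # Data Integration Service that will run the deployed application


# Hypothetical environment map; replace with your own domains and services.
ENVIRONMENTS: Dict[str, Environment] = {
    "dev": Environment(domain="Dom_Dev", dis="DIS_Dev"),
    "qa": Environment(domain="Dom_QA", dis="DIS_QA"),
    "prod": Environment(domain="Dom_Prod", dis="DIS_Prod"),
}


def deploy_command(env_name: str, app: str, iar_path: str,
                   user: str, password: str) -> List[str]:
    env = ENVIRONMENTS[env_name.lower()]  # KeyError for an unknown environment
    # ASSUMPTION: options reflect our understanding of "infacmd dis deployApplication";
    # confirm them in the Command Reference for your release.
    return [
        "infacmd.sh", "dis", "deployApplication",
        "-dn", env.domain, "-un", user, "-pd", password,
        "-sn", env.dis, "-a", app, "-f", iar_path,
    ]
```

Because only the map changes between environments, the archive that passed QA is the same archive that reaches Prod.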

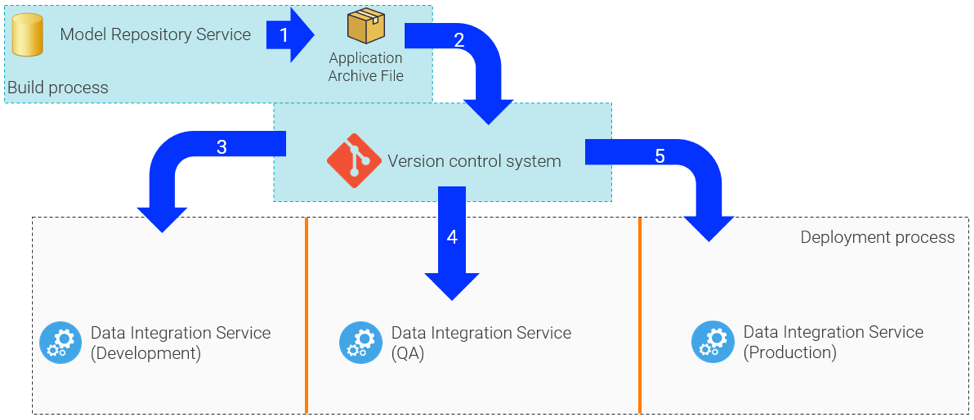

Below is the CI/CD Deployment best practice architecture for Big Data Management:

DevOps with Big Data Management

As the deployment process shows, the DevOps flow for Big Data Management has two parts: a build process flow and a deployment process flow.

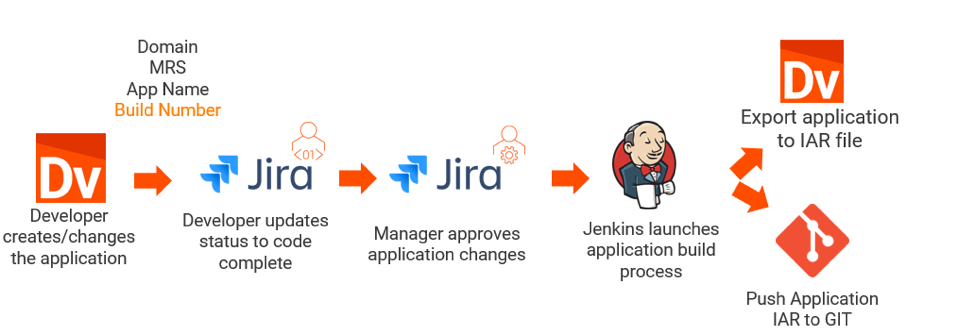

In the following build and deployment process flows, we use Jira as the ticketing system, Jenkins as the build system, and Git for version control. You can build the same flows with other source control, ticketing, and build tools.

Build Process Flow

In an Agile environment, developers are assigned Jira tickets for their daily activities. When developers create or update an application, they update the Jira ticket with relevant details such as the domain name, model repository name, application name, and build number. The development or build manager can review the developer’s changes and approve them. When the ticket’s status changes, Jenkins can detect the change in Jira and trigger a build. The Jenkins build can run scripts that create the .iar file for the application the developer worked on, pulling the application name and MRS details automatically from the Jira ticket.
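Inside the Jenkins job, pulling the build details from the ticket can be as simple as reading fields from the issue JSON returned by the Jira REST API, where values sit under `fields` and the workflow status under `fields.status.name`. In this sketch the custom field IDs and the `Approved` trigger status are hypothetical and must be replaced with the values from your Jira instance; how Jenkins learns about the status change (webhook or polling) is left to your Jenkins setup.

```python
from typing import Any, Dict, Optional

# Hypothetical mapping from build parameters to Jira custom field IDs;
# look up the real IDs in your Jira instance.
FIELD_IDS = {
    "domain": "customfield_10101",
    "mrs": "customfield_10102",
    "application": "customfield_10103",
    "build_number": "customfield_10104",
}
TRIGGER_STATUS = "Approved"  # hypothetical workflow status that starts a build


def build_params(issue: Dict[str, Any]) -> Optional[Dict[str, str]]:
    """Return build parameters if the ticket is in the trigger status, else None."""
    fields = issue.get("fields", {})
    status = (fields.get("status") or {}).get("name")
    if status != TRIGGER_STATUS:
        return None  # not ready yet; do not start a build
    params = {name: fields.get(field_id) for name, field_id in FIELD_IDS.items()}
    missing = [name for name, value in params.items() if not value]
    if missing:
        # Fail loudly so the ticket can be corrected instead of building the wrong thing.
        raise ValueError(f"{issue.get('key')}: missing {', '.join(missing)}")
    return {name: str(value) for name, value in params.items()}
```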

A Jenkins script can also push this .iar file to version control.
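A build step that pushes the .iar file to Git could look like the following sketch. It uses only standard `git add`, `git commit`, `git tag`, and `git push` commands; the tag naming convention (`<app>-build-<number>`) is a hypothetical choice that makes a specific build easy to find later, and the `run` parameter exists so the function can be tested without a real repository.

```python
import subprocess
from typing import Callable, List


def publish_iar(repo_dir: str, iar_relpath: str, app: str, build_number: str,
                branch: str = "main",
                run: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> List[List[str]]:
    """Commit a built .iar file, tag it with its build number, and push both."""
    tag = f"{app}-build-{build_number}"  # hypothetical naming convention
    commands = [
        ["git", "add", iar_relpath],
        ["git", "commit", "-m", f"{app}: build {build_number}"],
        ["git", "tag", tag],
        ["git", "push", "origin", branch, tag],
    ]
    for cmd in commands:
        # check=True raises CalledProcessError, so a failed git step fails the Jenkins build
        run(cmd, cwd=repo_dir, check=True)
    return commands
```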

Deployment Process Flow

When the application is ready to be deployed to the DIS, a developer can create a deployment request in Jira. In the ticket, the developer specifies the application, the target DIS, and the build number to deploy.

The deployment manager can review the request and approve the ticket in Jira. Once the ticket status changes to “Approved,” Jenkins can automatically trigger a job that pulls the .iar file from version control and deploys it to the DIS specified in the ticket.
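The approval-triggered deployment job might be sketched like this. It assumes a hypothetical simplified ticket dictionary, a hypothetical tag convention (`<app>-build-<number>`) and repository layout (`apps/<app>.iar`), and a `deploy` hook you would implement with your deployment command. Retrieving the file uses standard Git commands: `git fetch --tags` and `git cat-file blob <rev>:<path>`, which writes the raw contents of a file as stored at a given revision.

```python
import subprocess
from pathlib import Path
from typing import Callable, Dict


def handle_deployment_request(
    ticket: Dict[str, str],
    repo_dir: str,
    work_dir: Path,
    deploy: Callable[[str, str, Path], None],
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Deploy the requested build if the ticket is approved; return True if deployed.

    `ticket` uses hypothetical keys: status, application, dis, build_number.
    `deploy(app, dis, iar_path)` is a hypothetical hook wrapping the deployment call.
    """
    if ticket.get("status") != "Approved":
        return False  # nothing to do until the deployment manager approves
    app, dis, build = ticket["application"], ticket["dis"], ticket["build_number"]
    tag = f"{app}-build-{build}"  # hypothetical tag naming convention
    run(["git", "fetch", "--tags", "origin"], cwd=repo_dir, check=True)
    # Read the archive exactly as it was committed for that build.
    blob = run(["git", "cat-file", "blob", f"{tag}:apps/{app}.iar"],
               cwd=repo_dir, check=True, capture_output=True)
    iar_path = work_dir / f"{app}-{build}.iar"
    iar_path.write_bytes(blob.stdout)
    deploy(app, dis, iar_path)
    return True
```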

Putting It All Together to Create CI/CD Pipelines

CI/CD and DevOps are critical for delivering results quickly and efficiently in any modern software development project. To implement CI/CD pipelines successfully, it is important to understand what each piece of CI/CD means and how DevOps practices apply to it. Informatica Big Data Management is designed to work with the CI/CD and DevOps tools available in the market, and CI/CD and DevOps are areas in which Informatica is investing heavily. With the latest releases of Big Data Management, customers can build and deploy complex CI/CD pipelines using the utilities that come with the product.

Additional Resources

Check out the following resources for more information on DevOps and CI/CD for Big Data Management.

- Meet the Experts Webinar: CI/CD and DevOps for Big Data Management

- Informatica Network Big Data Management Community blog posts: