What Is DataOps?

DataOps, which stands for data operations, is a modern data management practice that streamlines and optimizes the design, deployment and management of data flows between data managers and consumers.

It may be called "DataOps," but it’s all about the people, processes and products. A high-performing DataOps practice iterates the processes, connects the people and delivers the products that accelerate business value by transforming massive amounts of raw data into a strategic business asset.

By leveraging best practices such as an incremental approach to innovation, ongoing stakeholder collaboration, automation of multiple processes and quality-by-design, DataOps delivers data integration workflows with an emphasis on speed, quality, reliability, accessibility and effectiveness.

The primary outcome is easy and timely access to business-ready insights for smarter decision-making.

Why Is DataOps Important?

The Data Integration Challenge for Growing Enterprises

Companies have been generating more data, in various formats, such as structured, unstructured, semi-structured, streaming and batch. This data streams in from multiple sources and flows into many systems, often causing data to fragment, lose quality or context, and even get lost in the layers and folds of the many systems.

But more data has never been the end goal. The quest for modern organizations is to get business users trusted, high-quality data in the right format at the right time, so they can use the insights to make smarter decisions.

Unfortunately, this has always been easier said than done. And no one knows this better than data professionals.

In the past, transforming data into insights meant a long journey from raw to consumable data. Data teams had to move vast sets of raw data from siloed environments into cloud data lakes for transformation. Once the data was cleansed, it was moved into cloud data warehouses, where analytics could be run to surface business insights.

When executed at enterprise scale, these data integration processes can quickly get complex and inefficient. Simple requests from business users can take days or even weeks to fulfill, instead of hours or minutes, leading to competitive vulnerabilities.

While all organizations acknowledge the transformative power of good data, operational challenges have made it increasingly hard to leverage data as a strategic business asset. This defeats the very purpose of data management!

Enter DataOps.

DataOps Removes Data Pipeline Bottlenecks

Business demands for data analytics are often urgent and unforeseen. They occur in an environment where the volume, velocity and variety of data are growing exponentially.

Data engineers cope by creating ad hoc pipelines in response to urgent business requests, often hand-coding without proper documentation or using incompatible tools just to get data moving. This results in duplication of workflows and pipelines, poor documentation, lack of version control and other challenges that impact data quality, governance, budgets and project timelines.

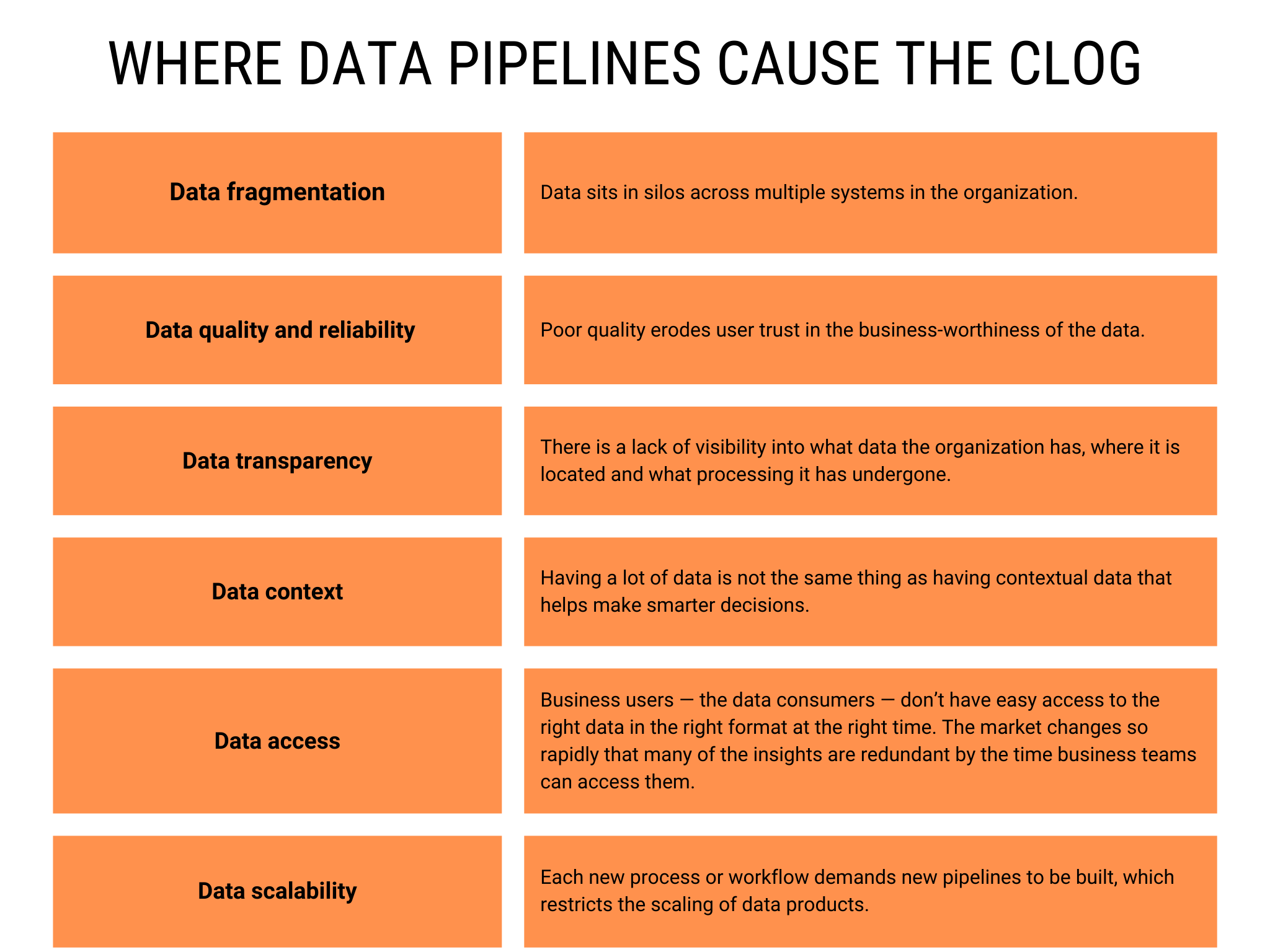

As managing, storing, transforming and tracking data from diverse sources becomes overwhelming and unwieldy, data pipelines often get clogged and become a major bottleneck for data integration workflows.

Data and analytics leaders at organizations realized that a powerful solution to the clogged data pipelines problem was needed. The DataOps practice has thus emerged to simplify and facilitate data integration workflows. It combines the best of DevOps, agile and lean methodologies to address data integration challenges and unlock the full power of data as a strategic business asset.

This article explains how DataOps can transform your data integration initiatives. It then defines the steps to set up high-performance DataOps, inspiring you with best practices, examples and ideal use cases to start delivering business value with data in your organization.

How Does DataOps Work?

The 4 Core DataOps Processes

DataOps comprises four core processes that work in tandem to deliver a better data experience for all stakeholders.

1. Data integration: Create a unified view of fragmented and distributed organizational data with seamless, automated and scalable data pipelines. The goal is to efficiently find and integrate the right data without any loss in context or fidelity.

2. Data management: Automate and streamline data processes and workflows throughout the data lifecycle - from creation to distribution. Agility and responsiveness during the data lifecycle are key to effective DataOps.

3. Data analytics development: Enable data insights at speed and scale with optimal, reusable analytics models, user-centric data visualization and ongoing innovation to continuously improve data models.

4. Data delivery: Ensure all business users can use data when it is most needed. This is not just about efficient storage, but also timely data access with democratized self-service options.

Do You Need a DataOps Practice?

DataOps is a relatively new component of data management. However, it is fast becoming a staple for data-driven organizations with an ever-growing number of data-dependent systems and teams.

If any of the following describes the environment your business operates in, it is time to consider setting up a DataOps practice:

A complex data ecosystem: Large organizations generate massive amounts of data each day. Do you have multiple sources generating structured, semi-structured and unstructured data? Is your data spread across different business systems and cloud or hybrid environments? Do you need to start streamlining and improving the efficiency of data processes to handle this growing complexity?

A data-driven approach to business: Data-mature organizations view data as a strategic business asset. Are there multiple stakeholders with diverse data analytics requirements, all adding up to a high number of data projects? Do you need a way to enable collaboration between these stakeholders and enhance the speed and quality of these data projects so that they don't become bottlenecks to data-powered decision-making?

Highly regulated industries: All businesses that collect and use data are subject to legal regulations and compliance standards. However, those operating in highly regulated industries, such as healthcare or financial services, have an even greater need to ensure data governance and transparency. Do you need a more efficient system to ensure the data is always secure and compliant?

DataOps vs. DevOps

DataOps is often confused with the more familiar DevOps. However, they are not the same thing. While both emphasize automation, collaboration and continuous improvement, DevOps is primarily focused on software development and delivery, whereas DataOps is focused on data analytics workflows and on data pipeline development and delivery. As a result, their goals are also different. DataOps aims to unlock the full business value of data by improving data quality, speed of delivery, governance, and user access to and adoption of data analytics. DevOps aims to develop and deliver new software with minimal bugs and deployment challenges.

What Are the Benefits of DataOps?

Here are the key benefits of DataOps and why you should employ it in your organization:

Dissolves data integration blocks: DataOps identifies and eliminates redundancies and bottlenecks in data integration and analytics workflows. Efforts to boost data team efficiency and resource optimization include streamlining workflows, shortening data refresh cycles and reusing and automating data pipelines.

Elevates data quality and reliability: Baking a ‘quality-by-design’ approach into end-to-end data workflows minimizes security and compliance risks. Robust and automated documentation, change management tracking and data governance ensure data democratization without vulnerabilities.

Engages stakeholders: A collaborative stakeholder-led approach that involves data, IT, quality assurance and business teams helps DataOps design the most effective data products and fosters joint accountability for data-driven insights as a business asset.

Empowers business users: DataOps designs user-friendly data visualizations, dashboards and self-serve options to help data consumers easily access and use data. This is especially crucial for time-sensitive functions such as marketing, sales and customer service.

Drives innovation: Data products that are flexible enough to scale and evolve with business needs come from a continuous iterative approach to innovation and improvement in both the product and the process. Continuous improvement is at the heart of DataOps.

What Defines a High-Performing DataOps Practice?

Defining the traits of a high-performance DataOps practice is a good place to start with your own DataOps vision. Establishing a DataOps practice is a strategic decision and requires a committed, long-term approach that is business-, people-, process- and data-centric.

Business-centric: Focused on creating data products to solve real business use cases and harness emerging opportunities, DataOps teams constantly innovate and iterate to surface and deliver new, more strategic insights.

People-centric: Accessible and responsive to data consumer needs, DataOps teams function not just as support, but also as a strategic part of business teams, balancing the business need for speed, agility and access with the IT team’s need for control and governance.

Process-centric: A continuous improvement approach delivers optimized data pipelines and efficient, streamlined processes through the data life cycle, from data source to integration, transformation, management, analytics and delivery.

Data-centric: Workflows are designed with data journeys, context and upstream and downstream impact in mind. High-quality, high-fidelity business-ready data can only come with a complete understanding of the nature of the data being handled, and baking data quality, governance and compliance into each step of the journey.

5 Steps to Implement Your DataOps Vision

1. Set up the team: Leadership buy-in, championed by the chief data and analytics officer, is needed to create a committed DataOps team that will oversee the data journey through its lifecycle, streamline the data integration and management workflows, align stakeholder priorities and own the development of new and innovative analytics products.

The best DataOps teams are cross-functional and bring diverse skills and perspectives. Roles typically include data scientists, analytics experts, data engineers, data analysts, data governance stewards and business liaison persons.

2. Identify the key stakeholders: Who are the key data consumers and what are their priorities and expectations? Aside from IT, data and business teams, also consider DevOps, quality assurance and operations teams. Put in place an engagement framework that enables regular discussion, collaboration and feedback loops with these stakeholders so that they remain equal partners in the DataOps initiative.

3. Define the scope of work and responsibilities: Guided by the data journey and business priorities of stakeholders, define the scope and reach of the DataOps team. This includes clarity on data integration, management, analytics development and data product deliverables.

Typically, the scope of work for DataOps teams would include:

- Streamlining and automating data pipelines: Create the systems for seamless data loading and ETL/ELT across systems and formats. Automate data integration processes to enable the reuse of pipelines, greater speed, fewer errors and scalability.

- Streamlining data workflows: Identify repetitive processes, process inefficiencies and gaps in collaboration, tracking and documentation.

- Creating and delivering data products: Make user-friendly data analytics products easily and securely available across the organization.

- Orchestrating the deployment of new data products: Integrate data products with current systems and ensure new incoming data is continually integrated for real-time insights.

- Establishing a data governance program: Define and execute the principles, standards and practices for the collection, storage, use, protection, archiving and deletion of data.

- Defining an iterative approach: Define a practical methodology to continuously experiment, manage feedback, iterate and innovate data management practices.

4. Define the DataOps stack: Identify and deploy the right tools and platforms to help achieve deliverables.

5. Define SLAs: Set milestones, metrics and KPIs in consultation with stakeholders, from data, engineering, IT and business teams, to ensure data quality and availability SLAs are met.
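To make the SLA step more concrete, here is a minimal sketch of how a data freshness SLA could be checked and summarized as a KPI. The `FreshnessSla` class, the `sales_daily` dataset name and the six-hour threshold are hypothetical and chosen only for illustration; real SLAs would be agreed with stakeholders and measured by your own tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class FreshnessSla:
    """Hypothetical SLA: a dataset must have been refreshed within max_age."""
    dataset: str
    max_age: timedelta


def check_freshness(sla, last_refreshed, now):
    """Return True when the dataset meets its freshness SLA at time `now`."""
    return (now - last_refreshed) <= sla.max_age


def sla_compliance(results):
    """Share of checks that passed, as a fraction between 0 and 1."""
    if not results:
        return 0.0
    return sum(results) / len(results)


# Illustrative data: four observations of the same dataset's age.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
sla = FreshnessSla(dataset="sales_daily", max_age=timedelta(hours=6))
checks = [
    check_freshness(sla, now - timedelta(hours=2), now),  # within SLA
    check_freshness(sla, now - timedelta(hours=5), now),  # within SLA
    check_freshness(sla, now - timedelta(hours=9), now),  # stale
    check_freshness(sla, now - timedelta(hours=1), now),  # within SLA
]
compliance = sla_compliance(checks)
print(f"{sla.dataset}: {compliance:.0%} of freshness checks met the SLA")
```

A compliance ratio like this is one possible KPI to review with stakeholders; availability or data quality SLAs could be tracked in the same way.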

DataOps Best Practices to Elevate Outcomes

DataOps teams also perform the intangible role of the data champion in the organization. This involves going beyond the basics to infuse DataOps best practices into their deliverables.

Build a holistic data culture: DataOps aims to take data, data-related workflows and data outcomes out of their silos and into a connected data ecosystem that is efficient and powerful. This enhances outcomes by:

- Taking a connected approach to the people, processes, operations and technologies that enable better data analytics development.

- Focusing on performance and quality optimization of the entire initiative rather than focusing on the productivity of a single entity or a stand-alone project.

- Monitoring data pipelines around customer SLAs and tying results back to business to measure the value of DataOps.

- Investing in data literacy efforts to educate and empower users to make the most of their analytics tools and unlock the full value of data.

Build pipelines for data quality: Leverage lean principles, such as statistical process control (SPC) measures, to create data pipelines with quality baked into each stage of the workflow, and flag any anomalies. Building business data glossaries and catalogs for change management and self-service will ensure a robust and scalable data infrastructure, even if data volume and variety is growing and evolving.
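As an illustration of the SPC idea, the sketch below computes simplified control limits (mean plus or minus three sample standard deviations) from a baseline of daily row counts and flags new loads that fall outside them. The metric, the sample numbers and the three-sigma threshold are assumptions made for this example, not a prescribed DataOps method.

```python
from statistics import mean, stdev


def control_limits(baseline, sigmas=3.0):
    """Return (lower, upper) control limits from baseline observations."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation
    return center - sigmas * spread, center + sigmas * spread


def flag_anomalies(values, lower, upper):
    """Return (index, value) pairs that fall outside the control limits."""
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical row counts from previous, known-good pipeline runs.
baseline_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]
lower, upper = control_limits(baseline_row_counts)

# New runs to evaluate; the second load is suspiciously small.
new_row_counts = [1003, 400, 1012]
anomalies = flag_anomalies(new_row_counts, lower, upper)
print(f"limits=({lower:.1f}, {upper:.1f}) anomalies={anomalies}")
```

The same check could be applied at each pipeline stage to other metrics, such as null rates or load durations, so that anomalies are flagged before data reaches consumers.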

Leverage Agile methodologies: Realize value with an incremental approach to innovation. Incorporate continuous feedback loops, experiment with incubate-test-iterate approaches to find new relationships between data and verify if it conforms to business logic and operational standards. Design for growth, evolution and scalability. See Figure 1.

Figure 1. Methodologies defined.

Top DataOps Use Cases

DataOps helps data engineers and integration specialists build and operationalize high-quality, trusted data pipelines and make them consumption-ready for analytics and AI projects. At the business end, DataOps enables strong data-led outcomes by delivering products that business users can leverage in practice.

The most common use cases for DataOps include:

1. Streaming analytics: In any situation where a non-stop flow of unstructured and semi-structured data needs to be analyzed in real time, you need streaming pipelines to leverage data in motion. For example, a home products company may have a continuous inflow of IoT data, a cosmetics retailer may have to monitor a non-stop global flow of social media feeds and an airline may need to track and analyze a global inflow of weather data to plan and optimize operations. Without DataOps, managing such vast sets of streaming data can become chaotic and lead to poor decision-making.

2. Data engineering: Core data management processes of ETL, ELT and reverse ETL need to be scaled seamlessly to manage high-volume workloads. For example, data may need to be scaled quickly and frequently from a regional level to a national level for various campaigns. DataOps enables data scaling for live production workflows, ensuring high volumes of data are processed efficiently, without loss of integrity.

3. Data observability: Monitoring the overall health of data pipelines supporting enterprise-scale analytics, AI and ML projects is essential for the timely detection and resolution of issues and vulnerabilities. DataOps frameworks help data engineers ensure these mission-critical pipelines are in a healthy state and take immediate action as and when issues occur.

4. FinOps: Running multiple AI projects or LLMs can quickly cause cloud computing costs to spiral out of control. DataOps puts in place dashboards to track GPU or compute consumption across departments and helps manage, measure and optimize cloud data management costs.

5. Data science and AI: Building AI/ML models and training LLMs for generative AI apps are entirely dependent on a steady flow of high-quality, trusted data. DataOps builds and operationalizes data pipelines from data ingestion to integration, to ensure the right data sets are fed to these ambitious projects.
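As a rough sketch of the FinOps use case above, the following example aggregates GPU usage by department and flags departments that exceed a budget. The record layout, the flat hourly rate and the budget figures are hypothetical; an actual dashboard would read usage from your cloud provider's billing or metering data.

```python
from collections import defaultdict


def cost_by_department(usage_records, rate_per_gpu_hour):
    """Sum GPU cost per department from a list of usage records."""
    totals = defaultdict(float)
    for record in usage_records:
        totals[record["department"]] += record["gpu_hours"] * rate_per_gpu_hour
    return dict(totals)


def over_budget(costs, budgets):
    """Return the departments whose cost exceeds their budget, sorted by name."""
    return sorted(
        dept for dept, cost in costs.items()
        if cost > budgets.get(dept, float("inf"))  # no budget means no limit
    )


# Hypothetical usage records and an assumed flat rate per GPU hour.
usage = [
    {"department": "marketing", "gpu_hours": 10.0},
    {"department": "research", "gpu_hours": 40.0},
    {"department": "marketing", "gpu_hours": 5.0},
]
costs = cost_by_department(usage, rate_per_gpu_hour=2.0)
flagged = over_budget(costs, {"marketing": 50.0, "research": 60.0})
print(costs, flagged)
```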

Essential Tools for DataOps

Like any initiative, DataOps requires tools and platforms working behind the scenes to enable flawless data management throughout the data life cycle. What used to be manual and custom-made, taking months to execute, can now be automated, replicated and scaled in days and hours thanks to these empowering DataOps tools. It is no surprise that the DataOps platforms market was valued at $3.9 billion in 2023 and is set to grow at a CAGR of 23% from now to 2028.

What Do DataOps Tools Do?

Robust data management tools help DataOps teams:

- Optimize collaboration

- Automate processes

- Ensure data quality and governance

- Develop and deliver analytics products.

Many tools also enable version control, access control, automated documentation and catalogs, monitoring and resource optimization, and self-help and self-heal features. Most tools today are also cloud-native and AI-powered to enhance speed and reliability.

The best tools not only build operational excellence through reliable data delivery but also improve productivity through multiple process integrations and automation. Ultimately, because they avoid the cost of manual operations and ad-hoc interventions, build reusable models and enable scale, the right tools can also positively impact DataOps cost-effectiveness.

5 Core Capabilities of Comprehensive DataOps Tools

1. Orchestration: Connectivity, workflow automation, lineage, scheduling, logging, troubleshooting, alerting

2. Observability: Monitoring live/historic workflows, insights into workflow performance, cost metrics and impact analysis

3. Environment management: Infrastructure as code, resource provisioning, environment repository templates, credentials management

4. Deployment automation: Version control, release pipelines, approvals, rollback, recovery

5. Test automation: Business rules validation, test scripts management, test data management
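To show what business rules validation under test automation might look like in practice, here is a minimal sketch that checks a record against a small set of named rules. The order fields, the rule names and the supported currencies are invented for illustration; dedicated DataOps tools typically provide their own rule definitions and test management.

```python
# Hypothetical business rules for an order record, keyed by rule name.
ORDER_RULES = {
    "amount_is_positive": lambda order: order["amount"] > 0,
    "currency_is_supported": lambda order: order["currency"] in {"USD", "EUR"},
    "customer_id_present": lambda order: bool(order.get("customer_id")),
}


def validate_order(order, rules=ORDER_RULES):
    """Return the names of all rules the order violates (empty if valid)."""
    return [name for name, rule in rules.items() if not rule(order)]


# A valid record and an invalid one, both made up for this example.
good_order = {"amount": 120.0, "currency": "USD", "customer_id": "C-001"}
bad_order = {"amount": -5.0, "currency": "GBP", "customer_id": ""}

good_result = validate_order(good_order)
bad_result = validate_order(bad_order)
print(good_result, bad_result)
```

Running checks like these automatically on each pipeline run, rather than by hand, is what allows rule violations to block a release or trigger an alert.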

The market includes both stand-alone vendors, which typically offer a limited scope and focus on specific functionalities such as observability, testing or pipeline automation, and established data management platforms that have added DataOps capabilities. It is widely recommended to use DataOps tools that give you a single view of diverse data workloads across heterogeneous systems (e.g., operational/analytics, on-premises/cloud, batch/real-time) and programmable interfaces (e.g., API, iPaaS, managed file transfer), with orchestration, lineage and automation capabilities. Also, consider compatibility with your current and near-future data stack.

The Future of DataOps

DataOps is here to stay and is widely seen as a must-have for any data-first organization with a modern data stack. Some trends impacting the growth of DataOps include:

- A growing alignment and interoperability with complementary data management practices such as MLOps and ModelOps.

- The emerging notion of PlatformOps, which has been described as a comprehensive AI orchestration platform that includes DataOps, MLOps, ModelOps and DevOps.

- AI gradually starting to augment nearly every aspect of DataOps, leading to growth in AI-powered DataOps.

High-performance DataOps teams are set to be a strategic differentiator for data-powered businesses and are well acknowledged as a key enabler of enterprise data transformation. When DataOps is done right, using the best of agile, lean and DevOps practices, companies see a concrete improvement in their data teams’ agility, speed and efficiency.

Next Steps

To go deeper into DataOps and learn how you can create a future-proof DataOps practice primed to work with AI and MLOps, get your copy of Informatica’s latest whitepaper Lead Your Data Revolution: Unlocking Potential with AI, DataOps and MLOps.