How to Modernize Your Data Architecture With Databricks and Informatica Change Data Capture

Last Published: Sep 16, 2025

Co-authored by Prasad Kona, Lead Partner Solutions Architect, Databricks

Your data architecture can make or break your business. Now, more than ever, it’s critical to upgrade your data architecture to beat the competition. Watch the webinar to learn why Databricks and Informatica are your best bet for success.

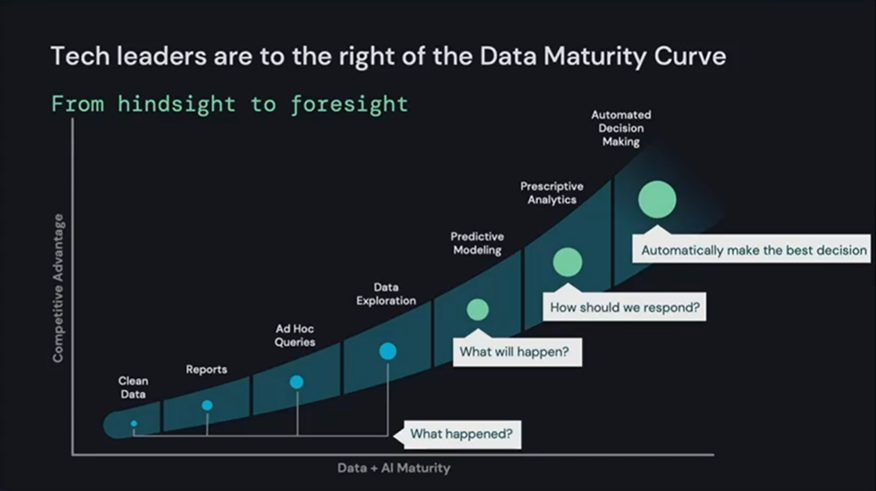

In the past, organizations spent a significant amount of time cleaning data so that its quality was high enough for critical decision-making. As data and analytics evolved (as shown in Figure 1), data engineers used ad hoc queries and data exploration to drill into the same data for even more detailed information. And today? To stay relevant in a crowded market, modern enterprises use predictive modelling and prescriptive analytics to speed up decision-making and gain a competitive advantage.

What makes this possible? Modern data architectures like cloud data warehouses and cloud data lakes. Let’s see how Databricks can help you implement an architecture that will help you stay ahead of the curve.

How Databricks Simplifies Your Data Stack

Data lakes and data warehouses store different types of data. Data warehouses store structured, filtered data that has already been processed for a specific purpose. Data lakes store raw data whose purpose has not yet been defined. To support advanced analytics and AI use cases, many organizations process data from both data lakes and data warehouses in parallel. The downside? These architectures are disparate and incompatible. Enter Databricks.

The Databricks Lakehouse Platform merges data warehouses and data lakes into one data platform. By combining the reliability, strong governance and performance of data warehouses with the openness, flexibility and machine learning (ML) support of data lakes, the Databricks Lakehouse Platform delivers the best of both worlds. This unified approach simplifies your modern data stack by eliminating data silos that traditionally separate and complicate data engineering, analytics, business intelligence (BI), data science and ML.

Databricks enables you to access data from both data warehouses and data lakes on a common platform, but how can you be sure that the data is as current and accurate as possible? Let’s find out.

Capitalize on Real-Time Change Data Capture

Data is time-bound; its value diminishes over time. And when a company can’t take immediate action based on fresh data, it can miss out on lucrative business opportunities or make decisions based on outdated data. Not ideal given today’s dynamic landscape.

To deliver game-changing outcomes, you need a tool that captures data changes and updates from transactional data sources in real time so you are in the know, always. Fortunately, change data capture (CDC) can help.

CDC allows you to detect and manage incremental changes at the data source as they happen, and to apply those changes downstream, throughout the enterprise. Because only the changed data is passed on to the target, CDC requires a fraction of the resources needed for full batch loads, which saves time and money. From there, CDC propagates these changes to analytical systems for real-time, actionable analytics so you have accurate, up-to-date data.
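To make the idea concrete, here is a minimal Python sketch of applying a stream of change events to a target table. It is an illustration only, not Informatica's or Databricks' API: the `ChangeEvent` type, the `apply_changes` function and the sample rows are all hypothetical, and the target is modeled as a plain dictionary keyed by primary key.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical change record; real CDC tools use their own formats."""
    op: str                      # "insert", "update" or "delete"
    key: int                     # primary key of the affected row
    row: Optional[dict] = None   # new row image; None for deletes


def apply_changes(target: dict, events: list) -> dict:
    """Apply change events, in order, to a target keyed by primary key."""
    for event in events:
        if event.op in ("insert", "update"):
            target[event.key] = event.row
        elif event.op == "delete":
            target.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
    return target


# Illustrative data: two existing rows, then three changes from the source.
target = {
    1: {"name": "Ada", "city": "London"},
    2: {"name": "Lin", "city": "Oslo"},
}
events = [
    ChangeEvent("update", 1, {"name": "Ada", "city": "Paris"}),
    ChangeEvent("delete", 2),
    ChangeEvent("insert", 3, {"name": "Sam", "city": "Lima"}),
]
apply_changes(target, events)
```

Only the three change events travel to the target; rows that did not change are never re-sent, which is where the resource savings come from.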

Let’s look at how combining a CDC tool, like Informatica CDC services, with Databricks can help you make better decisions and improve operational costs.

Informatica CDC for Databricks

As part of the Intelligent Data Management Cloud™ (IDMC), Informatica CDC services capture changes in data from applications (like SaaS and ERP data), relational databases, files and streaming sources (like Kafka, Internet of Things [IoT] and click streams), and replicate them directly into Delta Lake, which is part of the Databricks Lakehouse Platform.

Let’s walk through how this happens.

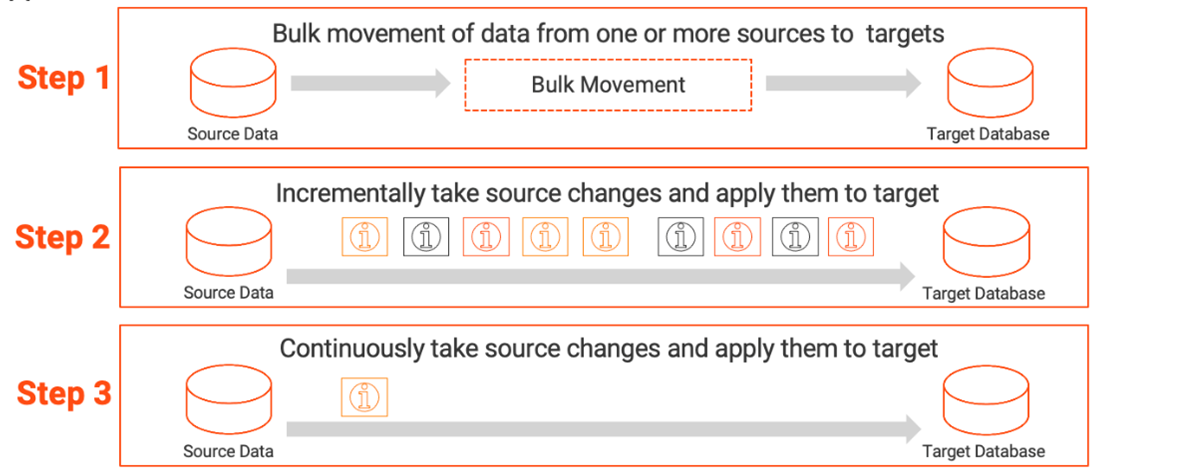

As shown in Figure 2, here is the process in three distinct steps:

- Step 1: First, the data is ingested in bulk by Informatica CDC from the source(s) to Databricks Delta Lake.

- Step 2: Next, only the incremental changes in the source data are captured and applied to Databricks Delta Lake.

- Step 3: The source data changes are captured continuously by Informatica CDC and applied to Databricks Delta Lake.

Voilà! The replicated data is now up to date so it can be leveraged for your analytics use cases, which can help speed up decision-making and lower operational costs.
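The flow in Figure 2 can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Informatica CDC is implemented: the `Replicator` class, its method names and the sequence-numbered change log are hypothetical, the log is assumed to be ordered by sequence number, and an in-memory dictionary stands in for the Delta Lake table.

```python
class Replicator:
    """Toy replicator: one bulk load, then repeated incremental syncs."""

    def __init__(self):
        self.target = {}
        self.last_seq = 0  # highest change-log sequence number already applied

    def initial_load(self, snapshot: dict, snapshot_seq: int) -> None:
        # Step 1: copy every row as of a known position in the change log.
        self.target = {key: dict(row) for key, row in snapshot.items()}
        self.last_seq = snapshot_seq

    def sync(self, change_log: list) -> int:
        # Steps 2 and 3: apply only the entries newer than the checkpoint.
        applied = 0
        for seq, op, key, row in change_log:
            if seq <= self.last_seq:
                continue  # already reflected in the target
            if op == "delete":
                self.target.pop(key, None)
            else:  # "insert" or "update"
                self.target[key] = dict(row)
            self.last_seq = seq
            applied += 1
        return applied


replicator = Replicator()
replicator.initial_load({1: {"qty": 5}, 2: {"qty": 8}}, snapshot_seq=10)

# Hypothetical change log entries: (sequence, operation, key, row).
log = [
    (9, "update", 1, {"qty": 4}),   # older than the snapshot, so skipped
    (11, "update", 1, {"qty": 3}),
    (12, "insert", 3, {"qty": 7}),
]
first = replicator.sync(log)

log.append((13, "delete", 2, None))
second = replicator.sync(log)       # only the new entry is applied
```

Keeping a checkpoint (`last_seq` here) is what lets each sync pick up where the previous one stopped instead of reloading the full data set.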

Let’s explore other advantages Informatica CDC brings to the table.

Top Benefits of Using Informatica CDC

Informatica CDC can quickly move and ingest a large volume of enterprise data from a variety of sources into cloud or on-premises repositories for processing and reporting, or onto messaging hubs for real-time analytics. Let’s review the top benefits of using this capability.

- Boosted efficiency: With Informatica CDC, only data that has changed is synchronized. This is dramatically more efficient than replicating an entire database. Continuous data updates save time and improve the accuracy of data and analytics.

- Accelerated decision-making: Given today’s volatile economy, making smart decisions quickly can separate the disruptors from the laggards. Having the ability to find, analyze and act on data changes in real time empowers you to create personalized digital experiences for your customers. For example, real-time analytics can help retail organizations efficiently manage their supply chain and keep their inventory up to date.

- Lower impact on production: Moving data from a source to a production server is time-consuming. Informatica CDC captures incremental updates with minimal impact on the source system and can read and consume those changes in real time. The analytics target is then continuously fed data without disrupting production databases. This opens the door to high-volume data transfers to the analytics target while reducing the workload on production systems.

- Faster time to value: Informatica CDC lets you build your offline data pipeline faster. This gives data engineers and data architects the freedom to focus on mission-critical tasks that can make a real difference for your business. Finally, Informatica CDC reduces dependencies on highly skilled application users, which lowers the total cost of ownership.

Bottom line? Informatica CDC helps accelerate business innovation, which can give you the competitive edge necessary to succeed in our high-pressure economy. And when combined with Databricks, the possibilities for success are endless.

Next Steps

Ready to learn how Informatica and Databricks can help you modernize your data architecture for real-time analytics? Watch the webinar to discover capabilities, key use cases and a real-world success story.